Friday, October 27, 2006, 10:35 PM - dev

A thousand lines of code since I last wrote, and a few hundred away from finishing Packet Garden. It's a matter of days now.

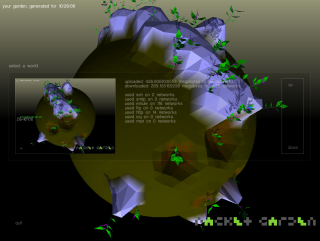

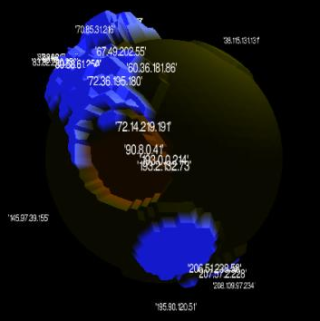

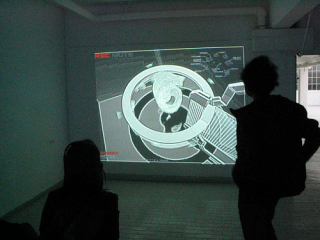

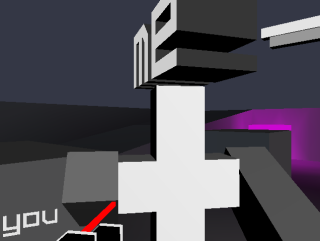

The UI code still needs some TLC - you can hear the bugs chirp at night - but there's now a basic configuration interface that saves out to a file and a history browser for loading in previously created worlds. Here's a little screenshot of the work in progress showing the world-browser overlay and the result of a busy night of giving on the eMule network.

Due to the vast number of machines a single domestic PC will reach for in a day of use, I've had to do away with graphing whole unique IPs and am now logging and grouping IPs within a network range, meaning all IPs logged between 193.2.132.0 and 193.2.132.255 are logged as a peak or trough at 193.2.132.255. This has dramatically dropped the total generation time of a world, including deforming the mesh and populating the garden world with flora. While I saw it as a compromise at first, it's actually closer to an original desire to graph 'network regions'; in fact I could even go higher up and log everything under 193.2.255.255.
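As a rough illustration of the grouping, here's a minimal sketch. It is not the Packet Garden code: it assumes the ranges are /24 blocks (as in the 193.2.132.0 - 193.2.132.255 example), it leans on Python's standard `ipaddress` module, and the function names and byte counts are invented for the example.

```python
import ipaddress
from collections import defaultdict

def range_key(ip, prefix=24):
    """Return the last address of the block containing ip (x.x.x.255 for a /24)."""
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.broadcast_address)

def group_traffic(samples, prefix=24):
    """Sum byte counts per block. samples is an iterable of (ip, nbytes) pairs."""
    totals = defaultdict(int)
    for ip, nbytes in samples:
        totals[range_key(ip, prefix)] += nbytes
    return dict(totals)

# Hypothetical captured traffic: two hosts in one range, one host in another.
samples = [("193.2.132.7", 1200), ("193.2.132.200", 300), ("193.2.140.1", 50)]
grouped = group_traffic(samples)
```

Calling `range_key` with `prefix=16` gives the coarser grouping, where everything under 193.2.x.x collapses into a single landscape feature.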


Thursday, September 14, 2006, 12:35 AM - dev

.. well, sort of.

Packet Garden is coming along nicely, though there are still a few challenges ahead. These are primarily related to building a Windows installer, which is not my forte as I primarily develop on and for Linux systems. Packet capture on Windows is also a little different from that of Linux, though I think I have my head around this issue for the time being. So far running PG on OSX looks to be without issue. I'd like to see the IPs resolved back into domain names, so that landscape features could be read as remote sources by name. While possible in itself, it isn't fast enough, as each lookup needs to be done individually.
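One possible way around the lookup bottleneck, sketched here purely as an idea and not as what PG does: each lookup is still individual, but a thread pool lets many of them wait on the network at once. The function names and the pool size are assumptions, and the code is written for a current Python 3.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

def reverse_lookup(ip):
    """Resolve one address to a hostname, falling back to the address itself."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        # No PTR record, timeout or resolver failure: keep the raw IP.
        return ip

def resolve_all(ips, resolver=reverse_lookup, workers=32):
    """Resolve many addresses concurrently and return a {ip: name} dict."""
    unique = sorted(set(ips))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        names = list(pool.map(resolver, unique))
    return dict(zip(unique, names))
```

The `resolver` argument is only there so the lookup can be swapped for a cache or a stub; in normal use `resolve_all(list_of_ips)` is enough.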

One thing I've been surprised by in the course of developing this project is just how many unique IPs are visited by a single host in the course of what is considered 'normal' daily use. If certain P2P software is being run, where connections to many hundreds of machines in the course of a day is not unusual, very interesting landscapes start to emerge.

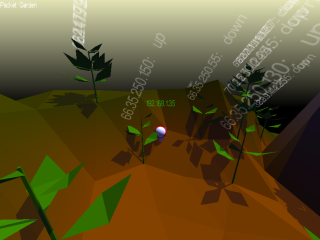

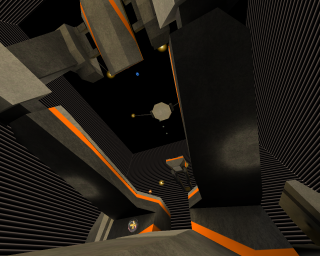

Basic plant-life now populates throughout the world, each plant representing a website visited by the user. Common protocols like SSH and FTP will generate their own items, visible on the terrain. Collision detection has also improved, aiding navigation, which is done with the cursor keys. I've added stencil shadows, a few other graphic features and am on the way to developing a basic user interface.

Previously it took a long time to actually generate the world from accumulated network traffic, but this has since been resolved thanks to some fast indexing and writing the parsed packet data directly to geometry. The advantage of the new system is that the user can start up Packet Garden at any point throughout the day and see the accumulated results of their network use.

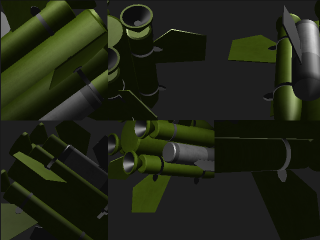

Here are a few snaps of PG as it stands today, demoing a world generated from heavy HTTP, Skype, SoulSeek and SSH traffic over the course of a few hours. More updates will be posted shortly.

Tuesday, August 15, 2006, 10:26 PM - dev

Mid this year the Arnolfini commissioned a new piece from me by the name of Packet Garden. It's not due for delivery yet (October), but here are a few humble screenshots of early graphical tests.

I'll put together a project page soon, but in the meantime, here's a quick introduction.

A small program runs on your computer capturing information about all the servers you visit and how much raw data you download from each. None of this information is made public or shared in any way; instead it's used to grow a little unique world - a kind of 'walk-in graph' of your network use.

With each day of network activity a new world is generated, each of which is stored as a tiny file for you to browse, compare and explore as time goes by. Think of them as pages from a network diary. I hope to grow simple plant-like forms from information like dropped packets also, but let's see if I get time..

I'm coding the whole project in Python, using the very excellent 3D engine, Soya3D.

Here's a gallery which I'll add to as the project develops.

Saturday, July 29, 2006, 02:24 PM - live

The HTTP Gallery in London is now showing my Second Person Shooter prototype in a show by the unassuming name of Game Play. Let's hope the little demo doesn't crumble under the weight of a thousand button-mashing FPS fans..

As soon as my commission for the Arnolfini is complete and shipped, I'll finish the _real version_. Promise.

Monday, July 24, 2006, 06:08 PM - log

The slightly unwieldy title is "Buffering Bergson: Matter and Memory in 3D Games", soon to be published in a collection of essays called 'Emerging Small Tech' by The University of Minnesota Press.

The paper was actually written some time ago and I've been meaning to upload papers I've written to this blog. Let this be the first.

Tuesday, July 11, 2006, 09:55 PM - live

This little sketch continues my ongoing fascination with multiple viewports, for both the visual compositional possibilities and for the divided object/subjecthood produced. I spent most of a day working on 'trapped rocket', exploring the use of viewports to contain their subject.

In this experiment I've built a 'prison' out of six virtual cameras, containing an aggressive object, a rocket trying to get out. All cameras are orthogonal to the next, looking inward, in turn producing a cube. Together all six cameras jail the rocket as it toils forever, trying new trajectories.
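For the curious, the camera arrangement can be sketched like so. This is a toy reconstruction under my own assumptions (one camera per axis direction, all at the same distance from the centre), not the code from the piece, and the names are made up.

```python
# One camera per axis direction. Each sits at a fixed distance from the
# origin and looks back at the centre, so the six views form the faces of a cube.
AXES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def camera_cage(distance=10.0):
    """Return a list of (position, look_direction) pairs for six inward cameras."""
    cameras = []
    for axis in AXES:
        position = tuple(distance * c for c in axis)
        look = tuple(-c for c in axis)  # point back toward the origin
        cameras.append((position, look))
    return cameras

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

cage = camera_cage()
```

Each camera's look direction has a zero dot product with four of the others (its orthogonal neighbours) and points straight at the one opposite.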

The 3.2M non-interactive, realtime project runs on a Linux system and is downloadable here. You'll need 3D graphics acceleration to run it. I wrote a script that took a gazillion screenshots and so here's the overly large gallery that inevitably resulted. Here's also a 7.4M, 1 minute clip in the Ogg Theora format.

Sunday, July 9, 2006, 04:43 PM - log

For those of you that weren't there, here are the lecture notes, in HTML, of the first half of my day-class at the FAMU in Prague. Thanks to CIANT and to the students for a fun and productive day.

Sunday, July 9, 2006, 03:36 PM - ideas

Marta and I just returned from Prague. One thing that struck us there was the large amount of time we spent in other people's photographs and videos, or at least within the bounds of their active lenses.

It's sadly inevitable that at some time a tourist mecca like Prague will capitalise on this, introducing a kind of 'scenic copyright' with a pay-per-click extension. Documenting a scene, or an item in a scene, would be legally validated as a kind of value deriving use, much in the same way as paying to see an exhibition is justified. Scenic copyright already exists, largely under the banner of anti-terrorism (bridges, important public and private buildings fall under such laws), but there are cases where pay-per-view tourism is already (inadvertently) working.

A friend, Martin, spoke of his experiences in St. Petersburg, where people are not allowed to photograph inside the subway, something enforced under the auspices of protecting the subway from terrorist attack. However, for a small fee you can be granted full rights to take photographs. Given that it's very expensive to have city wardens patrolling photographers, this will no doubt be automated, with Digital Rights Management of a scene enforced at a hardware level.

Here's a hypothetical worst case. Similar to the chip level DRM in current generation Apple computers (with others to follow), camera manufacturers may provide a system whereby tourists must pay a certain fee allowing them to take photographs of a given scene. If a camera is found to be within a global position falling under state sanctioned scenic-copyright, the camera would either cease to function at all or simply write out watermarked, logo-defaced or blank images. If you want a photo of the Charles Bridge without "City of Praha" on it, you'll have to pay for it..

Sunday, July 9, 2006, 01:32 PM - ideas

A newspaper reporting on a blind convention (a standard for blindness?) in Dallas talks about a text to speech device allowing blind people to quickly survey text in their local environment, further refining their reading based on a series of relayed choices. The device works by taking photographs of the scene and in a fashion similar to OCR, extracts characters from the pixel array, assembles them into words and feeds them to software for vocalisation.

Given the vast amount of text in any compressed urban environment, prioritising information would become necessary for a device like this to deliver information while it's still useful or else utility would be largely lost. The kind of text I'm talking about would include road-signs and building exit points.

Perhaps a position aware tagging system could be added to important signs using triangulated position from multiple RFID tags, bluetooth or other longer range high-resolution positioning system. Signs could be organised by their relative importance using 'sound icons', which in turn are binaurally mixed into a 3D sound field and unobtrusively played back over headphones (much as some fighter pilots cognitively locate missiles). The user would then hear a prioritised aural map of their textual surrounds before selecting which they should first read based on assessment of their current needs.

Thursday, June 29, 2006, 02:53 AM - dev

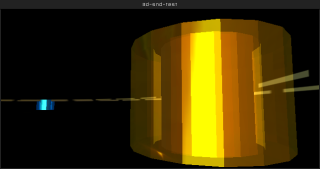

'Tapper' is a small virtual sound installation exploring iterative rhythmical structures using physical collision modeling and positional audio.

Three mechanical arms 'tap' a small disc, bouncing it against the ground surface. On collision each puck plays the same common sample. Because the arms move at different times polyrhythms are produced. The final output is mixed into 3D space, thus where you place yourself in the virtual room alters the emphasis of the mix.
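To see why offset arms give polyrhythms, here's a toy model. In the piece itself the timing falls out of the physics; this sketch instead assumes each arm strikes on a fixed period measured in clock ticks, and the periods 3, 4 and 5 are made up.

```python
from math import lcm  # math.lcm needs Python 3.9 or later

def tap_pattern(periods, steps):
    """For each tick, list the arms that strike (arm i strikes every periods[i] ticks)."""
    return [[i for i, p in enumerate(periods) if t % p == 0] for t in range(steps)]

periods = (3, 4, 5)      # hypothetical arm periods, in clock ticks
cycle = lcm(*periods)    # ticks before the combined pattern repeats
pattern = tap_pattern(periods, cycle)
```

With these periods the three arms only land together once per cycle, while pairs of arms coincide more often, which is where the shifting accents come from.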

I'll make a movie available soon and add it to this post.

Tuesday, June 27, 2006, 12:45 AM - log

many of you have written to me about the Blender --> PureData HOWTO i wrote up last year and how you've used it in your own projects. it's great to see this simple tute forming the basis of a few workshops and university classes now also (here's looking at you Andy!).

it seems the Wiretap site is currently down - and has been for a while. for this reason you'll probably be missing an essential ingredient of the tutorial, the pyKit Python -> OSC interface libraries.

because i'm very nice, i've archived them here until they're back online.

Sunday, June 25, 2006, 08:55 PM - howtos

Beagle is an 'instant search' tool for Linux systems, and normally works in conjunction with the Gnome Desktop, which I don't run. being able to instantly find files from the commandline is thus something i've wanted for a while. here's a simple howto for those of you that'd like instant search from the CLI. it assumes you have a Debian based system:

sudo apt-get install beagle

create a beagle index directory:

mkdir ~/.beagle-index

index all of $HOME:

sudo beagle-build-index --target ~/.beagle-index --recursive ~/

start the beagle daemon as $USER. it automatically backgrounds:

beagled

run 'beagle-query' in the background so that it looks for live changes to your indexed file system.

beagle-query --live-query &

now create a file called 'foobar' and search for it with:

beagle-query foobar

the result should instantly appear.

it may be better to alias it as 'bfind' or something in ~/.bashrc and start 'beagled' and 'beagle-query --live-query &' from ~/.xinitrc or earlier.

enjoy

Friday, June 2, 2006, 12:58 PM - live

it's in the Ogg Theora format (plays with VLC), is 84M slim and has a couple of minutes playing time.

this video is pretty much a record of the piece as it was exhibited at Lovebytes06, the game autoplaying continuously for two weeks.

there is no human input in this work, four bots fight each other, dying and respawning, over and over again. one of the four bots sends all of its control data to PD which in turn is used to drive a score. it's through the ears of this bot that we hear the composition driven by elements such as global-position in the map, weapon-state, damage-state and jump-pad events. For this reason game-objects and architectural elements were carefully positioned so that the flow of combat would produce common points of return (phrases) and the orchestration sounded right overall.

The scene was heavily graphically reduced so as to prioritise sound within the sensorial mix.

we have a multichannel configuration in mind for the future, whereby each bot is dedicated a pair of channels in the mix. the listener/audience would then stand at the center. perhaps we would remove video output altogether, so that all spatial and event perception was delivered aurally.

get it here.

Thursday, May 25, 2006, 11:41 PM - live

it's 40M and in the Ogg Theora format. if you don't know what that means just use the VLC player.

get the video here.

if you can deal with Flash, here's a YouTubed version.

Sunday, May 14, 2006, 02:16 AM - dev

thanks to a generous commission from Cybersonica, pix and I had the resources we needed to throw a month at creating an entirely new version of fijuu; the engine, artwork and audio got a complete overhaul.

development in such a short period of time was also testimony to the power of skype as a collaboration tool. we spent around 8-10 hours in the IM each day sending each other patches, artwork, code snippets etc right up until the point of walking out the door with Fijuu and Ubuntu on a shiny new Shuttle. if only Skype or an equivalent IM and VoIP tool had code-formatting and a sketch-block with drawing tools and SVG export..

the piece was installed at Phonica in London where it'll be on show until the 26th, alongside sister shows around London.

here are some screenshots of the finished work and most importantly here's the sourcecode if you're at all interested in compiling it:

pix has put together a README listing dependencies. don't leave home without it!

darcs pull http://fijuu.com/darcs

Thursday, April 13, 2006, 07:21 PM - howtos

fellow Kiwi Adam Hyde just sent through some snaps of a little workshop conducted in Lotte Meijer's house in Amsterdam over the snowy new-year.

we spontaneously decided to make TV transmitters according to a plan devised by Tetsuo Kogawa, a mate of Adam's. Adam and Lotte had the gear, so we got busy.

Marta, Adam, Lotte and I each soldered a board up, but Lotte's worked the best. the board has a little variable resistor for tuning channels and to our complete astonishment gave us a near perfect colour reception with a composite cable from a DVCam hardwired onto the board. we got around 10m of range between the transmitter and the TV before the signal was lost due to occlusions in the house itself. with a few boosters used in series this range would be (hypothetically) exponential.

fun and games - build one yerself!

Thursday, February 16, 2006, 05:07 AM - dev

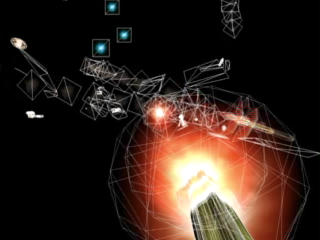

Thanks to Ed Carter and the Lovebytes folk, q3apd is finally getting a good showing in an installation context; to date it's largely been appreciated in a performance setting. Unlike prior appearances, q3apd will this time be played entirely by bots which is so far proving to be an interesting challenge.

While these bots are in mortal combat they are generating a lot of data. q3apd uses this data to generate a composition, in essence providing a means to 'hear' the events of combat as a network of flows of influence.

To do this, every twitch, turn and change of state in these bots is passed to the program PD where the sonification is performed; agent vectors become notes, orientations become accents and local positions become pitch. Events like jump-pads, teleporters, bot damage and weapon switching all add compositional detail (gestures you might say).
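To give a feel for this kind of mapping, here's a small sketch of turning a bot's height in the map into a pitch and handing it to PD. It is not how q3apd itself does it: the value ranges, the event name and the choice of transport (plain FUDI text over UDP, which a `[netreceive -u]` object in a PD patch can read) are all assumptions made for the example.

```python
import socket

def height_to_pitch(z, z_min=0.0, z_max=1000.0, low=36, high=84):
    """Linearly map a map-space height to a MIDI note number, clamped to [low, high]."""
    z = min(max(z, z_min), z_max)
    span = (z - z_min) / (z_max - z_min)
    return int(round(low + span * (high - low)))

def fudi(*atoms):
    """Format atoms as one FUDI message: space-separated, terminated by ';'."""
    return (" ".join(str(a) for a in atoms) + ";\n").encode("ascii")

def send_event(sock, address, name, value):
    """Send one named event to a PD patch listening on a UDP port."""
    sock.sendto(fudi(name, value), address)
```

In use, you'd open a datagram socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call something like `send_event(sock, ("127.0.0.1", 3000), "pitch", height_to_pitch(z))` once per game frame, with the port number matching whatever the patch listens on.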

Once the eye has become accustomed to the relationships between audible signal and the events of combat, visual material can be sequentially removed with a keypress, bringing the sonic description to the foreground.

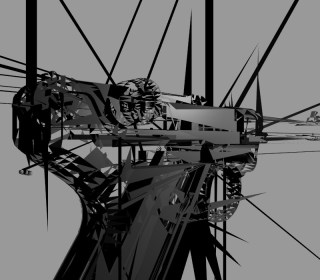

I've been working on a new environment for the piece and helping bots come to terms with the arena. Here's a preview shot. When the work goes live I'll post a video.

Thursday, February 2, 2006, 10:42 PM - dev

i've been working on a series of moving images designed for use on very large screens.

anagram 1

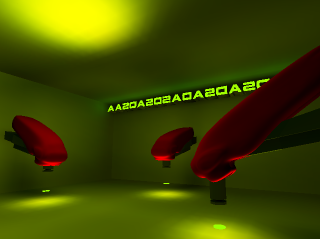

this composition takes about 10 minutes to complete a cycle and runs indefinitely. it's called "anagram 1" and is designed as a 'recombinational' triptych. it is not interactive in any way.

this is the binary [2.5M]. it runs on a Linux system with graphic acceleration. just run 'anagram1' after unpacking the archive.

here's a small gallery of screenshots.

anagram 2

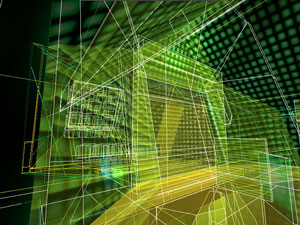

"anagram 2" takes much longer than the previous work to complete a cycle and spreads the contents of three separate viewports from one to the next to create complex depths of field. using orthogonal cameras, cavities compress and unfold as the architecture pushes through itself. the format of anagram2 is also natively larger.

here's the binary [2.4M]. it runs on a Linux system with graphic acceleration. run 'anagram2' after unpacking the archive.

here's a gallery of anagram 2.

Thursday, February 2, 2006, 10:18 PM - dev

2002-3

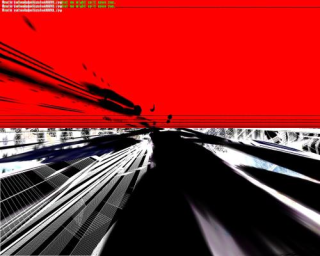

q3apaint uses software bots in Quake3 arena to dynamically create digital paintings.

I use the term 'paintings' as the manner in which colour information is applied is very much like the layering of paint given by brush strokes on the canvas. q3apaint exploits a redraw function in the Quake3 Engine so that instead of a scene refreshing its content as the software camera moves, the information from past frames is allowed to persist.

As bots hunt each other, they produce these paintings, turning a combat arena into a shower of gestural artwork.

The viewer may become the eyes of any given bot as they paint and manipulate the brushes they use. In this way, q3apaint offers a symbiotic partnership between player and bot in the creation of artwork.

The work is intended for installation in a gallery or public context with a printer. Using a single keypress, the user can capture a frame of the human-bot collaboration and take it home with them.

Visit the gallery.

Thursday, February 2, 2006, 10:11 PM - code

Some fun to be had. To start with try this:

    import ossaudiodev
    from ossaudiodev import AFMT_S16_LE
    from Numeric import *

    dsp = ossaudiodev.open('w')
    dsp.setfmt(AFMT_S16_LE)
    dsp.channels(2)
    dsp.speed(22050)

    i = 0
    x = raw_input("length: ")
    x = int(x)
    a = arange(x)

    while 1:
        # between 200 and 600 is good
        while i < x:
            i = i + 1
            b = a[i:]
            dsp.writeall(str(b))
            print i, ":", x
        else:
            while i != 0:
                i = i - 1
                b = a[i:]
                dsp.writeall(str(b))
                print i, ":", x

Thursday, February 2, 2006, 10:10 PM - code

it's harder than you think!

here it is in python ported from some Java i found online.

Thursday, February 2, 2006, 10:10 PM - code

here's a wifi access point browser i wrote in python for Linux users that prefer to use console applications. i'll get around to one that roams and pumps based on the best offer.

scent.py

Thursday, February 2, 2006, 10:09 PM - code

here's a little python script to let you know when your laptop battery is running low.

dacpi.py

Thursday, February 2, 2006, 10:08 PM - dotfiles

this rc resources pyzor, spamassassin, procmail and gnupg.

~/.muttrc

Thursday, February 2, 2006, 10:07 PM - howtos

i've been playing around with creating little demos in blender that explore its limits for positional (3D) audio. as it goes the limits are near and not very interesting. regardless it's perfectly possible to create installations using point based audio. i'm working on a series of these now. in the meantime here's a little HOWTO blender file..

PKEY in the camera window to play. ARROWKEYS to move, LMB-click and drag to aim (walk/aim stuff nicked from a blender demo file).

for those without blender who just want to see the results, here is a Linux binary. just make it executable (with KDE/Gnome or chmod +x file) and click or ./file to play.

Thursday, February 2, 2006, 10:07 PM - dev

2005

the first person perspective has always been privileged with the pointillism (or synchronicity) of a physiology that travels with the will in some shape or form, "I act from where I perceive" and "I am on the inside looking out". in this little experiment however, you are on the outside looking in.

in this take on the 2nd Person Perspective, you control yourself through the eyes of the bot, but you do not control the bot; your eyes have effectively been switched. naturally this makes action difficult when you aren't within the bot's field of view. so, both you and the bot (or other player) will need to work together, to combat each other.

there is no project page yet, but downloads and more information are available here.

Thursday, February 2, 2006, 10:06 PM - dev

1999-2000

this was my first successful foray into the use of games as performance environments.

this project uses a modified QuakeII computer gaming engine as an environment for mapping and activating audio playback, largely as raw trigger events. it works well both live and as an installation. I later implemented this project in Half-Life.

the project webpage and movie are here.
