Monday, October 15, 2007, 06:25 PM - dev

I've just finished the first beta (really an alpha) of my little AR/tangible-interface game levelHead. Admittedly there's not much up on the project page yet, but here's a YouTube video that conveys the general idea pretty well. It still has glitches but i'll iron those out soon enough.

At some point i also want to look into the idea of using invisible markers (have a few promising possibilities there) or full colour picture markers (also possible, though it requires much more CPU brawn).

Here's a better quality video in the OGG/Theora format (plays in VLC).

Enjoy.

Thursday, August 2, 2007, 06:09 PM - dev

Here are packages of Packet Garden for Ubuntu 7.04.

To install just download, double-click and go. You might want to install dpkt and pycap first (also found at the above link).

Tuesday, July 31, 2007, 11:37 AM - code

Dilemma: Hotel in foreign country and must wake up very early. Phone critically low on battery, charger missing, hotelier appears to be asleep and no alarm clock in sight. Very tired, reasonably inebriated.

Fix: Write a script that emulates the sound of my phone's alarm before passing out:

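    # simple alarm script.
    # requires the program 'beep'
    # turn up your PC speaker volume and use as follows:
    # 'python alarm.py HH:MM'

    import os
    import sys
    import time

    # wake time as an (hour, minute) tuple
    wakeTime = tuple(int(part) for part in sys.argv[1].split(':'))

    # sleep until the clock first reads HH:MM, so a wake time
    # on the far side of midnight doesn't go off straight away
    while tuple(time.localtime()[3:5]) != wakeTime:
        time.sleep(1)

    # then beep once a second until interrupted (Ctrl-C)
    while 1:
        os.system('beep -l 40 -f 2000 -n -l 40 -f 10000 -n -l 40 -f 2000')
        time.sleep(1)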

Monday, June 18, 2007, 03:56 PM - dev

Aside from moving country I've just finished developing a project at Interactivos at the excellent Media Lab Madrid. I tried to spend as much time as possible there but alas had chores like setting up a new apartment. Nonetheless I had a lot of fun.

Simone Jones was one of the instructors - someone who has a great deal of experience with electronics, especially in the context of motorised cameras. Because my previously offered project proved to be unfeasible in the time frame and Simone wanted to work on something, we decided to team up.

We threw around several ideas, mostly to do with 'editing' the existing architecture of the exhibition space by adding an extra room seen only through a CCTV like display - a kind of a haunting. However, as the lighting conditions of the space were changing so frequently in the days leading up to the group-show, we couldn't pull this off. For this reason we decided to work small - really small.

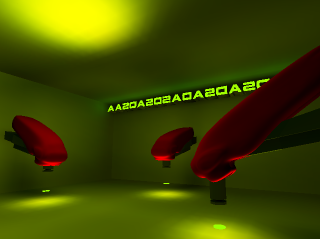

The idea was simple: augment a solid cube with 6 little rooms such that the cube becomes a tangible interface for navigating through an architecture: a mind-game - "How are the rooms connected?"

I added some code to ARToolkit so that it could support occlusion - ie hiding virtual objects 'behind', or 'inside', real objects and used a simple mask object to aid the process.

Here's a little clip in the OGG Theora format (plays in VLC) that perhaps better explains it all.

Simone and I are already talking about a large version of this for a later show. In the meantime I'm adapting it into a small game where you must help a character to escape the block by leading it from room to room: by turning the cube you select the next room the character will enter. Several cubes can be used so that when a character is finally led to the exit door of one cube it will jump to the entrance of another cube (or 'level') placed nearby. I plan to make this puzzle game around 5 cubes long. More about that later..

The exhibition uses a Sony EyeToy on an Ubuntu Linux system. Worth mentioning is that I used the super Rastageeks OV51x-JPEG drivers: a 640x480 webcam on Linux for less than EUR40? Look no further!

Addendum 19-06-07 For a long list of reasons I have never found character animation a very satisfying task - probably due to me being quite horrible at it. For this reason I'm very open to collaborating with a good character animator on this project. The data needs to come from Blender via the osgCal3D exporter (shipped with recent versions of Blender). Get in touch with me by interpreting this image.

Sunday, May 20, 2007, 06:52 PM - ideas

In the last few years quantum physicists and mathematicians have told us there may well be ground to the old "many worlds" theory - that there might be several different versions of any given dimension, or groups of dimensions, at the same time. Hugh Everett III is perhaps the most well known proponent of this theory.

Perhaps a many worlds gaming system would involve several players with the task of governing one simulated world each. Each world starts out with an equal number of objects and agents all of which begin as perfect temporal copies of the next. Gameplay might involve triggering/steering chains of events to the ends of creating the least synchronous world - ie. sequences of highly unlikely events. The world with the least eventful similarity within a given period of time will create a branch, and that player wins. At the point of a branch, identical copies are made and the game begins again, continuing from the point of that new branch.

Perhaps the notion of 'entanglement' could also be used as a strategic means of playing great similarity to an advantage: by successfully mirroring an event in another player's world entanglement could be triggered, giving the antagonist brief remote control over events therein.

While it could easily take on the form of a 2d game or orthographic sim-like title (like Habbo Hotel) the real work would be in creating a procedural event modeling system with an internal sense of consequence and wide potential for very absurd outcomes. Scenarios for an opening game need not be large at all - ordering a falafel or getting a haircut could give plenty of material to begin with.

12-05-07 Updated for clarity.

Saturday, May 5, 2007, 02:30 PM - ideas

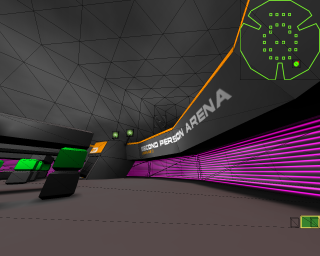

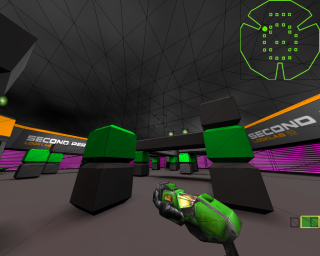

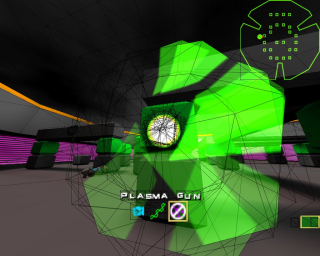

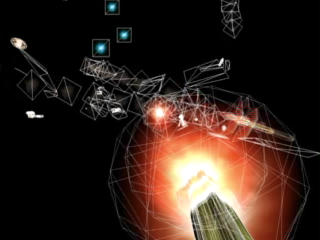

Any time I had leading up to Gameworld was spent working on 2ndPS2 (read Second Person Shooter for 2-players). I'd been meaning to make this little mod for years and decided that Gameworld was as good an opportunity as any to put the idea to the test.

Unlike the previous incarnation - a simple prototype written in Blender that far too many people got excited about - your views are switched with another player, not a bot. You are looking through their view and they through yours. When they press the key for forward on the computer, the view you're looking through responds accordingly, and vice versa. As it's all networked it's possible to play over the internet just as you would a normal multiplayer Quake3 game.

Naturally this makes it very tricky to actually play the thing as you can only navigate yourself with effect when you can see yourself: ie. you are within view of your opponent's gaze. In the few tests I did of 2ndPS2 before putting it on show people with no experience playing first-person-shooter games struggled with this reversal of the control paradigm very much, and so at the advice of Marta I built a sort of visual radar system so you could see where the other player was and vice versa.

This worked really well as far as reducing the confusion people would've had otherwise: in an exhibition context of the scale of Laboral people have very short attention spans and so a bang-for-buck approach like this was perhaps necessary. In practice it actually stood up reasonably well to these ends.

Conceptually however using this radar-helper is a bit of a compromise: why switch the views at all if you're providing a means for people to avoid engaging with a primary dislocation of perspective as an active part of the interface?

With this in mind I've decided to replace the visual radar with a sound-based system. You can hear where you are in the scene in relation to the view of your opponent - the view you're looking through. Events like walking into walls and picking up items are distinct sound events. The orientation of yourself out there in the scene is represented as changes to the pitch and harmonics of a continuous signal.

While I had much of this auditory feedback system already implemented I didn't use it at Laboral as it was far from ready for use.

I used ioquake3 to make 2ndPS2, spending a fair bit of time coming up with new rendering effects, sprites, weapons and other bits and bobs simply because I can't help myself when I have the source code in front of me (ahh the Garden Paths). Admittedly I could've simply taken a stock Quake3 map and considered this strictly as a conceptual piece, but when I started this I had the distinct feeling that I was beginning something much larger. Perhaps this is still the case.

Where to from here? Perhaps a mod that allows many people to play simultaneously; a hoppable second-person view matrix allowing you to change to any view other than your own. There would be a strategic component where views themselves are resources that need to be managed toward the ends of finding yourself in the arena long enough to gain control, bumping others over to a new view as required. Weapons could include a POV-grenade that shuffles all the current views of players within impact range. FOV-weapons (i've already made a couple) that suddenly throw the target into orthogonal views or warp the current camera as though the world were a rippling surface.

This sort of stuff I wanted to save for another project entirely - a strategic multiplayer game whereby you must find your first-person view in a large architecturally distributed view matrix - but Eddo suggested it would probably make a pretty nice addition to 2ndPS2.

Perhaps I will do this.. I'm always open to other suggestions and even collaborations.

Sunday, April 8, 2007, 03:56 AM - live

Here are a few galleries, broken up into categories based on when the images were taken during the cycle of action. I think what's in here is a little more interesting than what's seen in the earlier video as it also gives coverage of some live palette manipulation.

beginnings

fields

instants

endings

Monday, March 19, 2007, 11:23 PM - dev

The piece I made while serving as Artist in Residence at Georgia Tech finally ended up as 'ioq3aPaint': an automatic painting mechanism using QuakeIII where software agents in perpetual combat drag texture data as they fight, rendering attack vectors as graphic gesture. Here's a short clip (64M, 4'50", Ogg Theora) of one of the many iterations. It will play in VLC.

The exhibition was brief but the opening night and talk brought many thoughtful questions. Game designer and theorist Michael Nitsche was responsible for a lot of great commentary, some of which he wrote about here.

ioq3aPaint develops upon q3aPaint quite heavily, introducing a fresh palette and providing audiences with the ability to cycle through palettes in real time.

Not far off is the ability to send screenshots straight to a printer; the idea being that on the opening night of a future exhibition the audience could take screenshots while the abstractions evolve, which are in turn sent off to a large format canvas printer. The show itself would continue the following day as a normal painting exhibition.

If you're interested in playing around with QuakeIII as a painting tool you can get fairly far working only in the console. Play with r_fov, r_drawWorld and r_showTris, especially once you've entered 'team s' and there are a few bots in the scene. From there, start manipulating GL functions in code/render/ and drive them by adding new keybinds to code/client/cl_input.c.

A big thanks to all those in the LCC department for making it happen - an extra special thanks to Celia Pearce for setting up the initiative in the first place. Celia is one of the few people really pushing experimental game development practices in both institutional and industry contexts, and has been doing so for some years. Cheers to that.

Saturday, March 17, 2007, 02:05 AM - live

while teaching at Georgia Tech i've been in the company of some big screens, so i took the opportunity to film a long overdue clip of Fijuu2 in use.

we'd hoped for an inset of the gamepad but i didn't have access to two cameras at the time. regardless, this clip should explain what it's all about.

get it here. It's in the Ogg Theora format. If you don't know what that is, just use VLC.

Saturday, March 3, 2007, 09:30 PM - code

While here at Georgia Tech I'm giving a class on the development of 'expressive games', and for the purpose I chose Nintendo Wiimotes as the control context for class designs. The final projects will be produced in Blender, using the Blender game engine.

Only having Windows machines at my disposal I wrote a basic Python script that exposes accelerometer, tilt and button events from GlovePIE over Open Sound Control (which is natively supported by that application) to the realtime engine of Blender. I decided to go this way rather than create a bluetooth interface inside Blender for a few reasons: GlovePIE is a great environment for building useful control models from raw input, it supports the network capable protocol OSC and I wanted to keep input-server like code out of Blender (for reasons you'd understand if you used Python in Blender).

GlovePIE, however, is more than I need on Linux, and I don't have a Windows machine near me most of the time. I looked into various options for getting control data from a few different 'drivers' out over OSC and into Blender. Preferring to work in Python, I tried WMD but found it too awkward to develop with, although it is nothing short of comprehensive. I finally settled on the very neatly written (Linux only) libwiimote and wrote a simple little application in C to provide what I need. Here it is, wiiOSC.

To run it on your system you'll need libwiimote, Steve Harris's lightweight OSC implementation liblo, a bluetooth dongle (of course) and bluetooth support in your kernel (most modern distros support popular bt dongles out-of-the-box). wiiOSC will send everything libwiimote supports (IR, accelerometer, tilt, button events etc) to any computer you specify, whether to 127.0.0.1 or a machine on the internet.

wiiOSC is invoked as follows:

wiiOSC MAC remote_host port

For instance, to send wiimote data to a machine with the IP 192.168.1.102 on port 4950, I run:

wiiOSC 00:19:1D:2C:31:E1 192.168.1.102 4950

To get the MAC addr of your wiimote, just use:

hcitool scan

I use Blender as my listener context but you can of course pick up the wiimote data in any application that supports OSC: PureData, Veejay etc. To use Blender as your listener you'll need Wiretap's Python OSC implementation and this Blender file.

Enjoy.
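If you'd rather roll your own listener, an OSC message is simple enough to unpack by hand. What follows is a minimal sketch and not the Blender file mentioned above: it assumes plain OSC messages (no bundles) carrying only int32, float32 and string arguments, and the function names are made up for illustration. The addresses wiiOSC actually sends aren't listed here, so print what arrives and work from that.

```python
import socket
import struct


def _read_string(data, offset):
    """Read a null-terminated OSC string, padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary.
    next_offset = (end + 4) & ~3
    return text, next_offset


def decode_osc(data):
    """Decode one OSC message into (address, [arguments])."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    args = []
    for tag in tags[1:]:  # the type tag string starts with ','
        if tag == "i":    # big-endian 32-bit integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":  # big-endian 32-bit float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":  # padded string
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError("unsupported OSC type tag: %r" % tag)
    return address, args


def listen(port=4950):
    """Print every OSC message arriving on the given UDP port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("", port))
    while True:
        data, _sender = sock.recvfrom(4096)
        print(decode_osc(data))
```

Calling `listen(4950)` on the receiving machine pairs with the wiiOSC invocation above; stop it with Ctrl-C.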

Tuesday, February 6, 2007, 02:00 AM - code

I've picked out the packet capture part of PG and turned it into a reasonably useful and lightweight logger that should run on any UNIX system (tested on Linux). Packet length, remote IP, transaction direction, Country Code and port are all logged. Packet lengths are added over time, so you see an accumulation of traffic per IP.

Use (as root):

./pcap_collate <DEVICE> <PATH>

This script will capture, log and collate TCP and UDP packets going over <DEVICE> (eth0, eth1 etc). The <PATH> argument sets the location the resulting GZIPped log will be written to, which will be updated every 1000 packets.

For this reason the script will automatically generate a new log on a new day and can be restarted at any time without losing more than 1000 packets of traffic.

The log is a dump of the dict containing comma separated fields structured as follows:

IP, direction, port, geo, length

It will filter out all the packets on the local network, and so is intended for use in recording Internet traffic going over a single host.
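The collation step can be sketched roughly as below. This is an illustration under assumptions, not the code in pcap_collate: the class and method names are hypothetical, "local network" is approximated with Python's private-address check, and the capture itself (and the new-log-per-day behaviour) is left out.

```python
import gzip
import ipaddress
from collections import defaultdict


class Collator:
    """Accumulate packet lengths per (IP, direction, port, geo)."""

    def __init__(self, path, flush_every=1000):
        self.path = path
        self.flush_every = flush_every
        self.totals = defaultdict(int)
        self.seen = 0

    def add(self, ip, direction, port, geo, length):
        # Assumption: treat private ranges as "the local network" and skip them.
        if ipaddress.ip_address(ip).is_private:
            return
        self.totals[(ip, direction, port, geo)] += length
        self.seen += 1
        # Rewrite the log every flush_every logged packets.
        if self.seen % self.flush_every == 0:
            self.flush()

    def lines(self):
        """Render the accumulated totals in the log's field order."""
        return [
            "%s, %s, %s, %s, %d" % (ip, direction, port, geo, length)
            for (ip, direction, port, geo), length in sorted(self.totals.items())
        ]

    def flush(self):
        """Write the whole dict out as a gzipped text log."""
        with gzip.open(self.path, "wt") as log:
            log.write("\n".join(self.lines()) + "\n")
```

Because the totals live in one dict and the whole log is rewritten on each flush, a crash or restart costs at most the packets seen since the last write.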

Ports to be filtered for can be set in the file config/filter.config

Stop capture with the script 'stop_capture'.

Get it here. Unpack and see the file README.txt.

Friday, February 2, 2007, 09:09 PM - dev

Two new projects are in the wings, the first of which I'll announce now.

This project takes a wooden chess-board and repurposes it as a musical pattern sequencer, where chess pieces in the course of a game define when and which notes will be played.

Each side has a different timbre to be easily distinguishable from the other. Pawns have different sounds than bishops, which in turn have different sounds than knights, and so on.

As the game progresses and pieces are removed, the score increasingly simplifies.
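One way the board could be read as a pattern, sketched purely as a guess at the mapping: here files a-h are treated as the eight steps of the sequence and ranks 1-8 as pitches, with side and piece type carried along to pick the timbre. None of that is settled; the names and the scale are hypothetical.

```python
from collections import namedtuple

Note = namedtuple("Note", "step pitch side piece")

# Hypothetical pitch per rank: MIDI note numbers, C major from middle C.
RANK_PITCHES = [60, 62, 64, 65, 67, 69, 71, 72]


def board_to_pattern(board):
    """Turn a board into a sorted list of notes.

    board maps squares like 'e4' to (side, piece), e.g. ('white', 'pawn').
    """
    notes = []
    for square, (side, piece) in board.items():
        step = "abcdefgh".index(square[0])        # file a-h -> step 0-7
        pitch = RANK_PITCHES[int(square[1]) - 1]  # rank 1-8 -> pitch
        notes.append(Note(step, pitch, side, piece))
    return sorted(notes)
```

Every capture removes an entry from `board`, so the pattern thins out as the game goes on.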

It'll be developed at Pickled Feet laboratories with the eminent micro-CPU expert Martin Howser.

Thursday, February 1, 2007, 06:00 PM - dev

After several months hacking on this, I've finally released PG for all three platforms simultaneously. It's now considered 'stable'. Head over to http://packetgarden.com and take it for a ride.

A big thanks to: Jerub for detailed testing of the OS X PPC port, Marmoute for the OS X PPC package, Ababab for providing PPPoE test packets and extensive beta testing of the Windows port and for his feature suggestions, Davman for beta testing the Windows port and for some fine feature requests, Krishean Draconis for porting/compiling Python GeoIP for Windows, pix for optimisation advice, Marta for both her practical suggestions and eye for aesthetic detail, Atomekk for his early testing of the Win32 port and for the Win32 build of Soya, Jiba for Soya itself and all the other people that have sent bug-reports and hung out in IRC to help me fix them. A big and final thanks to Arnolfini (esp Paul Purgas) for the opportunity to learn a lot about packet sniffing, this thing called 'The Internet' and a fair bit more about 3D programming along the way. I've really enjoyed the process.

Now for something completely different..

Sunday, January 14, 2007, 03:22 AM - dev

As the topic doesn't suggest, http://packetgarden.com is now live. BETA testing is also well underway, with packages for Linux, Win32 and OS X going out the door and into the hot mitts of guinea pigs. if you're also up for a little beta testing, don't hesitate to get in touch!

i've had a lot of questions about this project, some about privacy, some about the development and engineering side of things. for this reason i've put up an 'about Packet Garden' page here.

Thanks to open standards, I wasn't entertaining madness undertaking the task of writing for 3 different operating systems simultaneously. That said a big thanks to marmoute for help with the reasonably grisly task of packaging the OS X beta.

< rant >

It's clear that developing a free-software project on a Linux system involves substantially less guesswork than on Windows and OS X.

Determining at which point the UNIX way stops and the Apple way begins in OS X Tiger is pretty tricky, with /System/Framework libraries often conflicting with libraries installed into /usr/local/lib or just libraries linked against locally. Because there is no ldconfig I don't have the advantage of a 'linker' and so I couldn't work out how to force my compiler to ignore libs in /System/Frameworks and link against my local installed libraries. If there is any rhyme or reason to this, or some FM I should RT, I'm keen to hear about it.

Acquiring development software on the Mac is also tricky: in Debian I have access to a pool of 16000+ packages readily available, pre-packaged and tested for system compatibility. A proverbial fish out of water, I took the advice of a seasoned Apple software developer and tried Darwin Ports and Fink but both had less than a third of the software I'm used to in Debian and were both pretty broken on the Mac I used anyway. So, it was back to Google, hunting around websites to find and download development libraries. I managed to find all the software ok, but as a result of finding it online, I'm never sure which version is compatible with the system as a whole - neither Windows nor OS X has any compatibility policy database or watchdog in place to anticipate or deal with software conflicts potentially introduced by software not written by Microsoft or Apple respectively. This is still a major shortcoming of both OSes I think. I can't see this happening with Microsoft in future but perhaps Apple will get it together one day and create it in the form of a compatibility database/software channel or similar that allows developers to test and register their projects for compatibility against Apple's own Libraries (and ideally those by others), sorted by license. Maybe this already exists and I don't know about it.

At this stage my development environment was nearly complete, but the libs I'd downloaded were causing odd errors in GCC. It turns out I needed to download a new version of the compiler, which is bundled into a 900Mb package called XCode that contains a ton of other stuff I don't need..

Getting a development environment up and running on Windows wasn't as difficult, though it suffers the same problems surrounding finding and installing software, let alone determining whether you're allowed to redistribute it or not; if the software I'm looking at is in Debian main, I can be sure it's free-software, hence affording me the legal right of redistribution.

One great advantage of developing on Windows again, the first time in around 6 years, is having to write code for an operating system that has such poor memory management. Everything written to memory has to be addressed with such caution that it greatly improved my code in several parts, for all platforms. Linux however has excellent memory management, and gracefully dances around exceptions where possible. Perhaps developing on Windows every once in a while is, in the end, actually a healthy exercise.

That said working with anything relating to networking on Windows is absolute voodoo at the best of times. Thankfully OS X has the sanity of a UNIX base so at least I can find out what is actually going on with my network traffic and the devices it passes over.

Saturday, December 16, 2006, 04:43 PM - dev

In the course of coding Packet Garden I've drawn on several external libraries, two of which deal with the packet capture part. One is Pypcap, an excellent Python interface to tcpdump's distribution of libpcap, and the other is dpkt.

As there were no Debian or Ubuntu packages I've packaged them and added them to a new repository where i'll host third party software i package for both these platforms in future.

Saturday, December 16, 2006, 03:12 PM - dev

As it eventuated, some measure of feature creep set in, but let's hope it's positive. The Arnolfini have given me more time, so I'm gladly taking it.

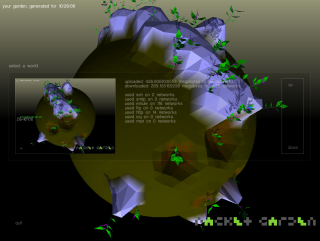

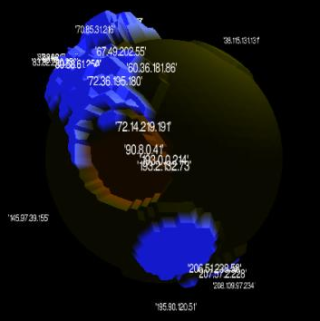

One addition is that Packet Garden now reports the geographical location of the remote machine you're accessing with 97% certainty, drawing information from an updated database on the host. This image shows it in action.

Detecting the geographical location of a remote host presents an interesting problem; IP block ranges are assigned to countries, but companies in those countries tend to do business over borders. So, while 91.64.0.0 might be an aggregation assigned to Deutschland, it is 'owned' and dealt out by an ISP called Kabel Deutschland. If the ISP were to expand into the Netherlands, there is nothing stopping Kabel Deutschland giving out German IPs to Dutch customers. It's at this point that taking a WHOIS lookup literally is the wrong approach.

I recently discovered that Maxmind offers a database with a reasonable level of accuracy under the LGPL, along with a Python interface to their GeoIP API. Right now this only works under Linux, but should work under OS X just fine. The Win32 version may have to wait until I can compile the lib for that platform.

Friday, October 27, 2006, 10:35 PM - dev

A thousand lines of code since I last wrote, and a few hundred away from finishing Packet Garden. It's a matter of days now.

The UI code still needs some TLC - you can hear the bugs chirp at night - but there's now a basic configuration interface that saves out to a file and a history browser for loading in previously created worlds. Here's a little screenshot of the work in progress showing the world-browser overlay and the result of a busy night of giving on the eMule network.

Due to the vast number of machines a single domestic PC will reach for in a day of use I've had to do away with graphing whole unique IPs and am now logging and grouping IPs within a network range, meaning all IPs logged between the range 193.2.132.0 - 193.2.132.255 would be logged as a peak or trough at 193.2.132.255. This has dramatically dropped the total generation time of a world, including deforming the mesh and populating the garden world with flora. While I saw it as a compromise at first, it's actually closer to an original desire to graph 'network regions'; in fact I could even go higher up and log everything under 193.2.255.255.
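The grouping amounts to replacing each address with the top address of its block. A minimal sketch using Python's standard `ipaddress` module follows; the function names are made up for illustration and this isn't lifted from Packet Garden itself. A prefix of 24 gives the per-range grouping described above, and a prefix of 16 is the "higher up" variant.

```python
import ipaddress
from collections import defaultdict


def group_key(ip, prefix=24):
    """Return the highest address in the block of the given size containing ip."""
    network = ipaddress.ip_network("%s/%d" % (ip, prefix), strict=False)
    return str(network.broadcast_address)


def group_traffic(packets, prefix=24):
    """Sum packet lengths per block; packets is an iterable of (ip, length)."""
    totals = defaultdict(int)
    for ip, length in packets:
        totals[group_key(ip, prefix)] += length
    return dict(totals)
```

With far fewer keys than unique hosts, there are far fewer peaks and troughs to deform into the mesh.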

Thursday, September 14, 2006, 12:35 AM - dev

.. well, sort of.

Packet Garden is coming along nicely, though there are still a few challenges ahead. These are primarily related to building a Windows installer, something not my forte as I primarily develop on and for Linux systems. Packet capture on Windows is also a little different from that of Linux, though I think I have my head around this issue for the time being. So far running PG on OSX looks to be without issue. I'd like to see the IPs resolved back into domain names, so that landscape features could be read as remote sources by name. While in itself possible it's not something that is done fast enough as each lookup needs to be done individually.

One thing I've been surprised by in the course of developing this project is just how many unique IPs are visited by a single host in the course of what is considered 'normal' daily use. If certain P2P software is being run, where connections to many hundred machines in the course of a day is not unusual, very interesting landscapes start to emerge.
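One common way around slow one-at-a-time lookups is to de-duplicate the addresses and run the lookups side by side. The sketch below shows that idea in present-day Python; it is not something Packet Garden does, the function names are hypothetical, and each lookup is still an individual request, just no longer a queued one.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def reverse_lookup(ip):
    """Resolve an IP to a host name, falling back to the IP itself."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # covers socket.herror and socket.gaierror
        return ip


def resolve_all(ips, lookup=reverse_lookup, workers=32):
    """Resolve each unique IP once, with lookups running concurrently."""
    unique = sorted(set(ips))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        names = pool.map(lookup, unique)
        return dict(zip(unique, names))
```

The `lookup` parameter is there so the resolver can be swapped out, for a cache or for testing without a network.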

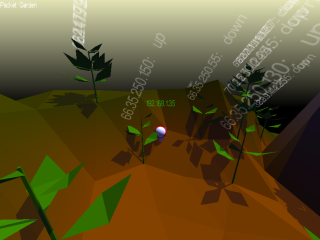

Basic plant-life now populates throughout the world, each plant representing a website visited by the user. Common protocols like SSH and FTP will generate their own items, visible on the terrain. Collision detection has also improved, aiding navigation, which is done with the cursor keys. I've added stencil shadows, a few other graphic features and am on the way to developing a basic user interface.

Previously it took a long time to actually generate the world from accumulated network traffic, but this has since been resolved thanks to some fast indexing and writing the parsed packet data directly to geometry. The advantage of the new system is that the user can start up Packet Garden at any point throughout the day and see the accumulated results of their network use.

Here are a few snaps of PG as it stands today, demoing a world generated from heavy HTTP, Skype, SoulSeek and SSH traffic over the course of a few hours. More updates will be posted shortly.

Tuesday, August 15, 2006, 10:26 PM - dev

Mid this year the Arnolfini commissioned a new piece from me by the name of Packet Garden. It's not due for delivery yet (October), but here are a few humble screenshots of early graphical tests.

I'll put together a project page soon, but in the meantime, here's a quick introduction.

A small program runs on your computer capturing information about all the servers you visit and how much raw data you download from each. None of this information is made public or shared in any way, instead it's used to grow a little unique world - a kind of 'walk-in graph' of your network use.

With each day of network activity a new world is generated, each of which are stored as tiny files for you to browse, compare and explore as time goes by. Think of them as pages from a network diary. I hope to grow simple plant-like forms from information like dropped packets also, but let's see if I get time..

I'm coding the whole project in Python, using the very excellent 3D engine, Soya3D.

Here's a gallery which I'll add to as the project develops.

Saturday, July 29, 2006, 02:24 PM - live

The HTTP Gallery in London is now showing my Second Person Shooter prototype in a show by the unassuming name of Game Play. Let's hope the little demo doesn't crumble under the weight of a thousand button-mashing FPS fans..

As soon as my commission for the Arnolfini is complete and shipped, I'll finish the _real version_. Promise.

Monday, July 24, 2006, 06:08 PM - log

The slightly unwieldy title is "Buffering Bergson: Matter and Memory in 3D Games", soon to be published in a collection of essays called 'Emerging Small Tech' by The University of Minnesota Press.

The paper was actually written some time ago and I've been meaning to upload papers I've written to this blog. Let this be the first.

Tuesday, July 11, 2006, 09:55 PM - live

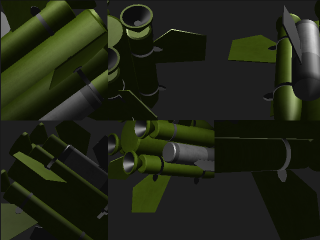

This little sketch continues my ongoing fascination with multiple viewports, for both the visual compositional possibilities and for the divided object/subjecthood produced. I spent most of a day working on 'trapped rocket', exploring the use of viewports to contain their subject.

In this experiment I've built a 'prison' out of six virtual cameras, containing an aggressive object, a rocket trying to get out. All cameras are orthogonal to the next looking inward, in turn producing a cube. Together all six cameras jail the rocket as it toils forever, trying new trajectories.

The 3.2M non-interactive, realtime project runs on a Linux system and is downloadable here. You'll need 3D graphics acceleration to run it. I wrote a script that took a gazillion screenshots and so here's the overly large gallery that inevitably resulted. Here's also a 7.4M, 1 minute clip in the Ogg Theora format.
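The camera arrangement is easy to state in code. This is a minimal sketch of the geometry only, assuming one camera on each side of the subject along the x, y and z axes; the function name and parameters are hypothetical and it isn't taken from the project.

```python
def cube_cameras(centre=(0.0, 0.0, 0.0), distance=1.0):
    """Return six (position, view_direction) pairs facing the centre.

    One camera sits on each side of the centre along each axis, so
    neighbouring views are at right angles and together box the subject in.
    """
    cameras = []
    for axis in range(3):
        for sign in (1.0, -1.0):
            offset = [0.0, 0.0, 0.0]
            offset[axis] = sign * distance
            position = tuple(c + o for c, o in zip(centre, offset))
            # Unit vector pointing from the camera back at the centre.
            direction = tuple(-o / distance for o in offset)
            cameras.append((position, direction))
    return cameras
```

Orthographic projection and the up-vector for each camera are left to whatever engine does the rendering.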

Sunday, July 9, 2006, 04:43 PM - log

For those of you that weren't there, here are the lecture notes, in HTML, of the first half of my day-class at the FAMU in Prague. Thanks to CIANT and to the students for a fun and productive day.

Sunday, July 9, 2006, 03:36 PM - ideas

Marta and I just returned from Prague. One thing that struck us there was the large amount of time we spent in other people's photographs and videos, or at least within the bounds of their active lenses.

It's sadly inevitable that at some time a tourist mecca like Prague will capitalise on this, introducing a kind of 'scenic copyright' with a pay-per-click extension. Documenting a scene, or an item in a scene, would be legally validated as a kind of value deriving use, much in the same way as paying to see an exhibition is justified. Scenic copyright already exists, largely under the banner of anti-terrorism (bridges, important public and private buildings fall under such laws), but there are cases where pay-per-view tourism is already (inadvertently) working.

A friend Martin spoke of his experiences in St. Petersburg, where people are not allowed to photograph inside the subway, something enforced under the auspices of protecting the subway from terrorist attack. However for a small fee you can be granted full right to take photographs. Being that it's very expensive to have city wardens patrolling photographers it will no doubt be automated, where Digital Rights Management of a scene would be enforced at a hardware level.

Here's a hypothetical worst case. Similar to the chip level DRM in current generation Apple computers (with others to follow), camera manufacturers may provide a system whereby tourists must pay a certain fee allowing them to take photographs of a given scene. If a camera is found to be within a global position falling under state sanctioned scenic-copyright, the camera would either cease to function at all or simply write out watermarked, logo-defaced or blank images. If you want a photo of the Charles Bridge without "City of Praha" on it, you'll have to pay for it..

Sunday, July 9, 2006, 01:32 PM - ideas

A newspaper reporting on a blind convention (a standard for blindness?) in Dallas talks about a text-to-speech device allowing blind people to quickly survey text in their local environment, further refining their reading based on a series of relayed choices. The device works by taking photographs of the scene and, in a fashion similar to OCR, extracts characters from the pixel array, assembles them into words and feeds them to software for vocalisation.

Given the vast amount of text in any compressed urban environment, prioritising information would become necessary for a device like this to deliver information while it's still useful; otherwise most of its utility would be lost. The kind of text I'm talking about would include road signs and building exit points.

Perhaps a position-aware tagging system could be added to important signs, using a position triangulated from multiple RFID tags, Bluetooth or another longer-range, high-resolution positioning system. Signs could be organised by their relative importance using 'sound icons', which in turn are binaurally mixed into a 3D sound field and unobtrusively played back over headphones (much as some fighter pilots cognitively locate missiles). The user would then hear a prioritised aural map of their textual surrounds before selecting which sign to read first, based on an assessment of their current needs.
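
To make the 'prioritised aural map' a little more concrete, here's a rough Python sketch. Everything in it is an assumption for illustration: the `Sign` fields, the importance weights and the scoring rule (importance falling off with distance) are invented, and a real device would need proper positioning and binaural rendering. It only ranks the signs and works out the angle at which each sound icon would sit relative to the direction the listener is facing.

```python
import math
from dataclasses import dataclass


@dataclass
class Sign:
    text: str
    importance: float  # hypothetical weight, higher means more urgent
    x: float           # metres east of an arbitrary origin
    y: float           # metres north of an arbitrary origin


def aural_map(signs, listener_x, listener_y, heading_deg):
    """Return (sign, azimuth_deg, distance_m) tuples, most urgent first.

    azimuth_deg is relative to the listener's heading:
    0 is straight ahead, positive is to the right, negative to the left.
    """
    entries = []
    for sign in signs:
        dx = sign.x - listener_x
        dy = sign.y - listener_y
        distance = math.hypot(dx, dy)
        # compass bearing of the sign, clockwise from north
        bearing = math.degrees(math.atan2(dx, dy))
        # make it relative to the heading and wrap into [-180, 180)
        azimuth = (bearing - heading_deg + 180.0) % 360.0 - 180.0
        # assumed scoring rule: importance falls off with distance
        score = sign.importance / (1.0 + distance)
        entries.append((score, sign, azimuth, distance))
    entries.sort(key=lambda entry: entry[0], reverse=True)
    return [(sign, azimuth, distance) for _, sign, azimuth, distance in entries]
```

The azimuth and distance of each entry are what a binaural mixer would need to place the corresponding sound icon; the order of the list is the order in which the icons would be presented.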

Thursday, June 29, 2006, 02:53 AM - dev

'Tapper' is a small virtual sound installation exploring iterative rhythmical structures using physical collision modeling and positional audio.

Three mechanical arms 'tap' a small disc, bouncing it against the ground surface. On collision each puck plays the same sample. Because the arms move at different times, polyrhythms are produced. The final output is mixed into 3D space, so where you place yourself in the virtual room alters the emphasis of the mix.
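
For a feel of how identical samples on different cycles add up to a polyrhythm, here's a tiny Python sketch. It is not how Tapper works internally: there is no physics here at all, and the fixed tapping periods (in arbitrary 'ticks') are an assumption made purely for illustration.

```python
def tap_times(period_ticks, total_ticks, offset_ticks=0):
    """Ticks at which one arm's puck hits the ground."""
    return list(range(offset_ticks, total_ticks, period_ticks))


def polyrhythm(periods, total_ticks):
    """Map every tick with at least one collision to the arms that hit on it."""
    timeline = {}
    for arm, period in enumerate(periods):
        for tick in tap_times(period, total_ticks):
            timeline.setdefault(tick, []).append(arm)
    # sort by tick so the result reads like a score
    return dict(sorted(timeline.items()))
```

With periods of 3, 4 and 5 ticks the three arms only coincide again after 60 ticks, so the pattern of accents takes a while to repeat even though every hit sounds the same.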

I'll make a movie available soon and add it to this post.

Tuesday, June 27, 2006, 12:45 AM - log

many of you have written to me about the Blender --> PureData HOWTO i wrote up last year and how you've used it in your own projects. it's great to see this simple tute forming the basis of a few workshops and university classes now also (here's looking at you Andy!).

it seems the Wiretap site is currently down - and has been for a while. for this reason you'll probably be missing an essential ingredient of the tutorial, the pyKit Python -> OSC interface libraries.

because i'm very nice, i've archived them here until they're back online.

Sunday, June 25, 2006, 08:55 PM - howtos

Beagle is an 'instant search' tool for Linux systems, and normally works in conjunction with the Gnome Desktop, which I don't run. being able to instantly find files from the command line is thus something i've wanted for a while. here's a simple howto for those of you that'd like instant search from the CLI. it assumes you have a Debian-based system:

sudo apt-get install beagle

create a beagle index directory:

mkdir ~/.beagle-index

index all of $HOME:

sudo beagle-build-index --target ~/.beagle-index --recursive ~/

start the beagle daemon as $USER. it automatically backgrounds:

beagled

run 'beagle-query' in the background so that it looks for live changes to your indexed file system.

beagle-query --live-query &

now create a file called 'foobar' and search for it with:

beagle-query foobar

the result should instantly appear.

it may be better to alias it as 'bfind' or something in ~/.bashrc and start 'beagled' and 'beagle-query --live-query &' from ~/.xinitrc or earlier.
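
if you'd rather call the search from a script than from a shell alias, a thin Python wrapper is one option. this is only a sketch: the names `bfind` and `bfind_command` are made up here, it assumes 'beagle-query' is on your PATH and 'beagled' is already running, and it uses nothing beyond the plain 'beagle-query <terms>' form shown above.

```python
import subprocess


def bfind_command(terms):
    """Build the argument list for a one-off beagle-query search."""
    if not terms:
        raise ValueError("need at least one search term")
    return ["beagle-query", *terms]


def bfind(terms):
    """Run the search and return whatever lines beagle-query prints.

    Assumes beagle-query is installed and beagled is running.
    """
    result = subprocess.run(
        bfind_command(terms),
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout.splitlines()
```

calling `bfind(["foobar"])` should then give back the same hits you'd see in the terminal, one per list entry.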

enjoy

Friday, June 2, 2006, 12:58 PM - live

it's in the Ogg Theora format (plays with VLC), is 84M slim and has a couple of minutes playing time.

this video is pretty much a record of the piece as it was exhibited at Lovebytes06, the game autoplaying continuously for two weeks.

there is no human input in this work: four bots fight each other, dying and respawning, over and over again. one of the four bots sends all of its control data to PD, which in turn is used to drive a score. it's through the ears of this bot that we hear the composition, driven by elements such as global-position in the map, weapon-state, damage-state and jump-pad events. For this reason game-objects and architectural elements were carefully positioned so that the flow of combat would produce common points of return (phrases) and the orchestration sounded right overall.
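
the transport between game and PD isn't described above, so the following is purely illustrative. assuming the control data were sent as OSC messages over UDP, one bot update could be packed like this in Python. the address '/bot/state', the field order, the host and the port are all invented for the example, and the PD patch would need an OSC-capable receiver listening on that port.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def bot_state_message(x, y, z, weapon, damage):
    """Pack one bot update as an OSC message: three floats, two ints."""
    return (
        osc_string("/bot/state")  # hypothetical address
        + osc_string(",fffii")    # type tags: float x3, int x2
        + struct.pack(">fffii", x, y, z, weapon, damage)  # big-endian
    )


def send_to_pd(message, host="127.0.0.1", port=9000):
    """Send one message over UDP; host and port are assumptions."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

on the receiving side each argument could then be routed to whichever part of the score it drives, position to one voice, weapon-state to another, and so on.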

The scene was heavily graphically reduced so as to prioritise sound within the sensorial mix.

we have a multichannel configuration in mind for the future, whereby each bot is dedicated a pair of channels in the mix. the listener/audience would then stand at the center. perhaps we would remove video output altogether, so that all spatial and event perception was delivered aurally.

get it here.
