Sunday, May 20, 2007, 06:52 PM - ideas

In the last few years quantum physicists and mathematicians have told us there may well be grounds for the old "many worlds" theory - that there might be several different versions of any given dimension, or group of dimensions, at the same time. Hugh Everett III is perhaps the best-known proponent of this theory.

Perhaps a many worlds gaming system would involve several players, each with the task of governing one simulated world. Each world starts out with an equal number of objects and agents, all of which begin as perfect temporal copies of those in the next world. Gameplay might involve triggering and steering chains of events with the aim of creating the least synchronous world, i.e. one built from sequences of highly unlikely events. The world whose events are least similar to the others' within a given period of time will create a branch, and that player wins. At the point of a branch, identical copies are made and the game begins again, continuing from the point of that new branch.
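To make the branching rule a little more concrete, here's a rough sketch in Python. Everything in it is my own assumption rather than a worked-out design: worlds are reduced to plain lists of event names, "least similar" is measured as the mean Jaccard distance between event sets, and the names `divergence`, `pick_branch` and `branch` are made up for illustration.

```python
from copy import deepcopy


def divergence(log, others):
    """Mean Jaccard distance between one world's events and each other world's."""
    def jaccard_distance(a, b):
        a, b = set(a), set(b)
        union = a | b
        if not union:
            return 0.0
        return 1.0 - len(a & b) / len(union)

    return sum(jaccard_distance(log, other) for other in others) / len(others)


def pick_branch(worlds):
    """worlds maps player -> list of event names. Needs at least two players.

    Returns the player whose world is least similar to the rest, plus all scores.
    """
    if len(worlds) < 2:
        raise ValueError("need at least two worlds to compare")
    scores = {}
    for player, log in worlds.items():
        others = [l for p, l in worlds.items() if p != player]
        scores[player] = divergence(log, others)
    winner = max(scores, key=scores.get)
    return winner, scores


def branch(worlds, winner):
    """Every player restarts from an identical copy of the winning world."""
    return {player: deepcopy(worlds[winner]) for player in worlds}


worlds = {
    "ana": ["order falafel", "pay", "eat"],
    "ben": ["order falafel", "pay", "eat"],
    "cy": ["order falafel", "pigeon steals falafel", "chase pigeon"],
}
winner, scores = pick_branch(worlds)
next_round = branch(worlds, winner)
```

A set-based measure ignores the order of events, which is probably too crude for a real event modeling system, but it's enough to show the loop: score, pick the outlier, copy, go again.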

Perhaps the notion of 'entanglement' could also be used as a strategic means of playing great similarity to an advantage: by successfully mirroring an event in another player's world entanglement could be triggered, giving the antagonist brief remote control over events therein.

While it could easily take on the form of a 2d game or orthographic sim-like title (like Habbo Hotel) the real work would be in creating a procedural event modeling system with an internal sense of consequence and wide potential for very absurd outcomes. Scenarios for an opening game need not be large at all - ordering a falafel or getting a haircut could give plenty of material to begin with.

12-05-07 Updated for clarity.

Saturday, May 5, 2007, 02:30 PM - ideas

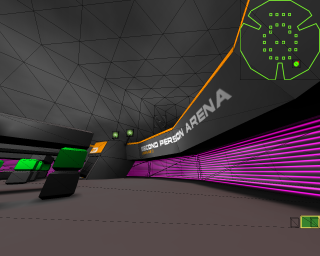

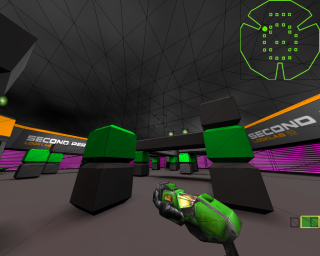

Any time I had leading up to Gameworld was spent working on 2ndPS2 (read Second Person Shooter for 2-players). I'd been meaning to make this little mod for years and decided that Gameworld was as good an opportunity as any to put the idea to the test. Unlike the previous incarnation - a simple prototype written in Blender that far too many people got excited about - your view is switched with another player's, not a bot's. You are looking through their view and they through yours. When they press the key for forward on their computer, the view you're looking through responds accordingly, and vice versa. As it's all networked it's possible to play over the internet just as you would a normal multiplayer Quake3 game.

Naturally this makes it very tricky to actually play the thing, as you can only navigate yourself with effect when you can see yourself, i.e. when you are within your opponent's gaze. In the few tests I did of 2ndPS2 before putting it on show, people with no experience playing first-person-shooter games struggled very much with this reversal of the control paradigm. So, on the advice of Marta, I built a sort of visual radar system so that each player could see where the other was.

This worked really well as far as reducing the confusion people would've had otherwise: in an exhibition context of the scale of Laboral people have very short attention spans and so a bang-for-buck approach like this was perhaps necessary. In practice it actually stood up reasonably well to these ends.

Conceptually however using this radar-helper is a bit of a compromise: why switch the views at all if you're providing a means for people to avoid engaging with a primary dislocation of perspective as an active part of the interface?

With this in mind I've decided to replace the visual radar with a sound-based system. You can hear where you are in the scene in relation to the view of your opponent - the view you're looking through. Events like walking into walls and picking up items are distinct sound events. The orientation of yourself out there in the scene is represented as changes to the pitch and harmonics of a continuous signal.
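As a rough illustration of the pitch side of this (not the code in the mod, which lives in the ioquake3 source), here's a small Python sketch. The mapping is an assumption of mine: the tone is highest when you're dead centre in the view you're looking through and drops by an octave as you move directly behind it. The function names, the 220 Hz base and the one-octave range are all hypothetical, and harmonics are left out entirely.

```python
import math


def relative_bearing(viewer_pos, viewer_yaw_deg, target_pos):
    """Angle of the target off the viewer's forward axis, wrapped to [-180, 180)."""
    dx = target_pos[0] - viewer_pos[0]
    dy = target_pos[1] - viewer_pos[1]
    bearing = math.degrees(math.atan2(dy, dx)) - viewer_yaw_deg
    return (bearing + 180.0) % 360.0 - 180.0


def bearing_to_pitch(bearing_deg, base_hz=220.0, octaves=1.0):
    """Map off-axis angle to a frequency: dead ahead is highest, behind is base_hz."""
    off_axis = abs(bearing_deg) / 180.0  # 0 when dead ahead, 1 when directly behind
    return base_hz * 2.0 ** (octaves * (1.0 - off_axis))


# The view you're looking through sits at the origin, facing along +x.
viewer_pos, viewer_yaw = (0.0, 0.0), 0.0
for you in [(10.0, 0.0), (0.0, 10.0), (-10.0, 0.0)]:
    bearing = relative_bearing(viewer_pos, viewer_yaw, you)
    print(you, round(bearing), round(bearing_to_pitch(bearing), 1))
```

Because the mapping only uses the absolute angle, left and right sound the same; a second parameter (harmonics, or stereo panning) would be needed to tell them apart.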

While I had much of this auditory feedback system implemented already, I didn't use it at Laboral as it was far from ready.

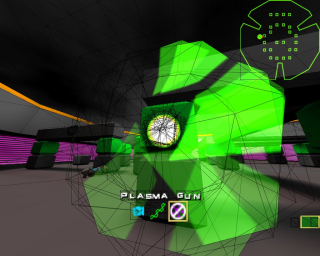

I used ioquake3 to make 2ndPS2, spending a fair bit of time coming up with new rendering effects, sprites, weapons and other bits and bobs simply because I can't help myself when I have the source code in front of me (ahh the Garden Paths). Admittedly I could've simply taken a stock Quake3 map and considered this strictly as a conceptual piece, but when I started this I had the distinct feeling that I was beginning something much larger. Perhaps this is still the case.

Where to from here? Perhaps a mod that allows many people to play simultaneously: a hoppable second-person view matrix allowing you to change to any view other than your own. There would be a strategic component where views themselves are resources that need to be managed toward the ends of finding yourself in the arena long enough to gain control, bumping others over to a new view as required. Weapons could include a POV-grenade that shuffles all the current views of players within impact range, and FOV-weapons (I've already made a couple) that suddenly throw the target into orthogonal views or warp the current camera as though the world were a rippling surface.
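The POV-grenade is simple enough to sketch. This is Python rather than anything from the mod, and the data layout is assumed: `views` maps each player to the player whose camera they're currently looking through, `positions` holds 2D coordinates, and `pov_grenade` is a made-up name.

```python
import math
import random


def pov_grenade(views, positions, impact, radius, rng=random):
    """Shuffle the current views of every player within `radius` of `impact`.

    views: dict player -> player whose camera they look through.
    Players outside the blast keep the view they had.
    """
    hit = [p for p, pos in positions.items() if math.dist(pos, impact) <= radius]
    shuffled = [views[p] for p in hit]
    rng.shuffle(shuffled)
    new_views = dict(views)
    for player, view in zip(hit, shuffled):
        new_views[player] = view
    return new_views


views = {"a": "b", "b": "c", "c": "d", "d": "a"}
positions = {"a": (0, 0), "b": (1, 0), "c": (2, 0), "d": (50, 50)}
print(pov_grenade(views, positions, impact=(1, 0), radius=2))
```

A plain shuffle can leave someone where they were, or even hand them their own view. Whether that's a bug or a lucky break is a design decision; a stricter version would reshuffle until nobody ends up looking through their own eyes.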

This sort of stuff I wanted to save for another project entirely - a strategic multiplayer game whereby you must find your first-person view in a large architecturally distributed view matrix - but Eddo suggested it would probably make a pretty nice addition to 2ndPS2.

Perhaps I will do this. I'm always open to other suggestions and even collaborations.

Sunday, July 9, 2006, 03:36 PM - ideas

Marta and I just returned from Prague. One thing that struck us there was the large amount of time we spent in other people's photographs and videos, or at least within the bounds of their active lenses.

It's sadly inevitable that at some time a tourist mecca like Prague will capitalise on this, introducing a kind of 'scenic copyright' with a pay-per-click extension. Documenting a scene, or an item in a scene, would be legally validated as a kind of value-deriving use, much in the same way as paying to see an exhibition is justified. Scenic copyright already exists, largely under the banner of anti-terrorism (bridges and important public and private buildings fall under such laws), but there are cases where pay-per-view tourism is already (inadvertently) working.

A friend, Martin, spoke of his experiences in St. Petersburg, where people are not allowed to photograph inside the subway, something enforced under the auspices of protecting the subway from terrorist attack. However, for a small fee you can be granted full right to take photographs. Given that it's very expensive to have city wardens patrolling photographers, this will no doubt be automated, with Digital Rights Management of a scene enforced at a hardware level.

Here's a hypothetical worst case. Similar to the chip-level DRM in current generation Apple computers (with others to follow), camera manufacturers may provide a system whereby tourists must pay a certain fee allowing them to take photographs of a given scene. If a camera is found to be within a global position falling under state-sanctioned scenic copyright, the camera would either cease to function at all or simply write out watermarked, logo-defaced or blank images. If you want a photo of the Charles Bridge without "City of Praha" on it, you'll have to pay for it.

Sunday, July 9, 2006, 01:32 PM - ideas

A newspaper reporting on a blind convention (a standard for blindness?) in Dallas talks about a text-to-speech device allowing blind people to quickly survey text in their local environment, further refining their reading based on a series of relayed choices. The device works by taking photographs of the scene and, in a fashion similar to OCR, extracting characters from the pixel array, assembling them into words and feeding them to software for vocalisation.

Given the vast amount of text in any compressed urban environment, prioritising information would become necessary for a device like this to deliver information while it's still useful; otherwise its utility would be largely lost. The kind of text I'm talking about would include road signs and building exit points.

Perhaps a position-aware tagging system could be added to important signs, using a position triangulated from multiple RFID tags, Bluetooth or another longer-range, high-resolution positioning system. Signs could be organised by their relative importance using 'sound icons', which in turn are binaurally mixed into a 3D sound field and unobtrusively played back over headphones (much as some fighter pilots cognitively locate missiles). The user would then hear a prioritised aural map of their textual surrounds before selecting which sign to read first, based on an assessment of their current needs.
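The ordering step, at least, is easy to sketch. In the Python below I assume each tagged sign already reports a position and a priority (lower numbers meaning more important, an arbitrary scale of mine), and that the user's position and compass heading are known. The `Sign` class, `aural_map` and the 50 m cut-off are all hypothetical. The sketch stops short of the binaural mix itself: it only produces the play order, with the azimuth and distance a spatial audio renderer would need.

```python
import math
from dataclasses import dataclass


@dataclass
class Sign:
    text: str
    priority: int  # assumed scale: lower means more important
    x: float       # metres east of some shared origin
    y: float       # metres north of the same origin


def aural_map(signs, user_pos, heading_deg, max_range=50.0):
    """Return (text, azimuth_deg, distance_m) for nearby signs, most important first.

    Azimuth is relative to the user's heading: 0 is straight ahead,
    positive is to the right, negative to the left.
    """
    entries = []
    for s in signs:
        dx, dy = s.x - user_pos[0], s.y - user_pos[1]
        distance = math.hypot(dx, dy)
        if distance > max_range:
            continue  # too far away to be worth announcing
        compass = math.degrees(math.atan2(dx, dy))  # 0 = north, 90 = east
        azimuth = (compass - heading_deg + 180.0) % 360.0 - 180.0
        entries.append((s.priority, distance, azimuth, s.text))
    entries.sort()  # by priority first, then by distance
    return [(text, round(azimuth), round(distance, 1))
            for _, distance, azimuth, text in entries]


signs = [
    Sign("Cafe", 5, -4.0, 0.0),
    Sign("STOP", 1, 3.0, 0.0),
    Sign("EXIT", 0, 0.0, 5.0),
    Sign("Museum 2km", 3, 100.0, 0.0),
]
for icon in aural_map(signs, user_pos=(0.0, 0.0), heading_deg=0.0):
    print(icon)
```

Sorting by priority and then by distance means an exit across the room is announced before a cafe sign at your elbow, which seems right for the road-sign and exit cases above.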