Thursday, April 2, 2009, 01:54 AM - dev

A little demo of the Artvertiser at work on a postcard. Here I'm testing the tracking in relatively low light and with plenty of movement.

Video Postcard from Julian Oliver on Vimeo.


Friday, March 6, 2009, 07:19 PM - dev

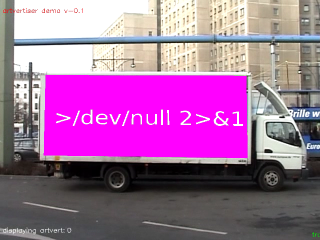

I've just posted a video documenting the progress of The Artvertiser.

Aside from the videos below, there's an additional clip of version 0.2 at work on a billboard. You can also see a new 'in world' artvert labeling system at work.

Enjoy!

Thursday, February 26, 2009, 08:22 PM - dev

I've made good progress on The Artvertiser software, with several live tests out in the field proving successful. Clara and Diego are working on the hardware, and to that end we've ordered a couple of BeagleBoards for the handheld device (binoculars).

Here are a couple of videos of recent field tests:

Callao, Madrid, 18M AVI

Heinrich Heine Platz, Berlin, 16M AVI

Alexander Platz, Berlin, 51M AVI

Nokia and Google, if you're reading, feel free to send me some hardware so I can target your platforms. (Nokia: your N96; Google: an HTC Magic and/or a Texas Instruments OMAP34x-II MDP Zoom with Android would be lovely. Thank you!)

Monday, July 7, 2008, 02:54 PM - dev

I've documented two 'software triptychs' I made in 2006, and one I recently finished, here.

Enjoy.

Monday, April 14, 2008, 12:56 PM - dev

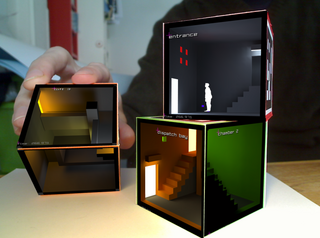

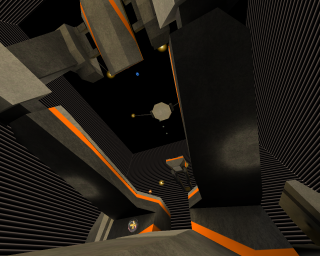

It's been a good couple of weeks working on levelHead, in preparation for the Homo Ludens Ludens (aka "Man, the player") exhibition at LABoral, Gijon, Asturias, Spain.

The controls are far more robust and a great many bugs have been slain (in a caring and respectful way). There are now three playable levels and a bunch of user notifications and other goodies that aid navigation.

At the 11th hour pix came on board to migrate the tracker from ARToolkit to ARToolkitPlus, which has worked splendidly: tracker stability is far better than it was with my previous ARToolkit implementation.

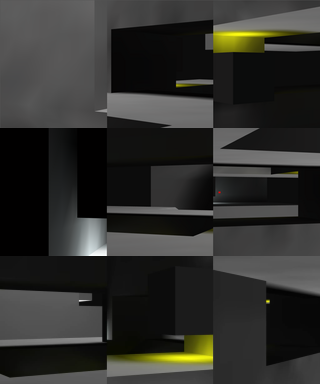

While we worked together he went on a bug hunt, chasing in particular a graphical glitch where two rooms were being drawn at the same time. I'd written the first version intending only one room to be drawn at a time (one marker to be tracked, for simplicity), but with the aid of a stencil buffer he managed to make use of the likely occurrence that two or even three rooms can be seen at once:

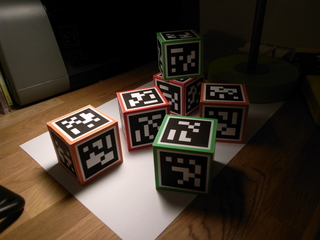

Development hasn't all been in code; I also have some lovely new cubes:

So, at the end of a fairly fierce two weeks of programming, levelHead is ready to be unleashed on the Asturians: it will be installed at LABoral for five months. For those who can't make it to Gijon, levelHead will next be exhibited at Sonar in Barcelona this year.

More about that later.

Wednesday, February 6, 2008, 10:54 PM - dev

More Artvertising..

The two videos below show basic live image substitution on a postcard, as seen by my webcam.

This clip demonstrates playing a movie 'on' the postcard and this video demonstrates cycling through a variety of images while attempting to emulate the local lighting conditions.

It's still not as stable as I'd like but nonetheless it's getting there.
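One simple way to approximate the "emulate the local lighting" step is a global brightness gain: compare how bright the marker looks in the current camera frame with how bright it is in the stored reference image, and scale the replacement image by that ratio. The sketch below assumes greyscale pixel lists and a hypothetical `match_lighting` helper; it illustrates the idea and is not the Artvertiser's actual code, which may well do more (colour balance, local gradients).

```python
def mean(values):
    return sum(values) / len(values)

def match_lighting(replacement, observed_region, reference_region):
    """Scale replacement pixels (0-255 greyscale) by how much darker or brighter
    the marker currently appears than in its reference image (hypothetical helper)."""
    ref = mean(reference_region)
    gain = mean(observed_region) / ref if ref else 1.0
    # Clamp to the valid 8-bit range after scaling.
    return [min(255, max(0, round(p * gain))) for p in replacement]
```

If the postcard currently reads at half its reference brightness, every pixel of the substituted image is halved too, so it sits in the scene rather than glowing on top of it.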

The idea, of course, isn't to substitute images on arbitrary postcards but on big billboards, bus-stops and sign advertising in cities. I do have a clip of a substitution of a road-side sign but it's a bit rubbish due to it being quite dark at the time.

Unlike (most) other augmented reality techniques, which use specially designed black-and-white fiducial markers, here the image itself is the marker. This is much more processor-intensive than normal marker tracking.

Naturally I'd love to see this working on a mobile phone, but having played with a Nokia N95 recently (perhaps the best-spec'd phone for this sort of work), it's clear that fast image detection is well beyond the scope of current phone hardware, at least at more than a few frames a second. That's not to say standard augmentation using fiducial markers (like those used with ARToolkitPlus) doesn't work fine on such a phone.

Nonetheless, a UMPC built into a pair of binoculars is probably a bit more fun out in the field anyway.

Monday, October 15, 2007, 06:25 PM - dev

I've just finished the first beta (really an alpha) of my little AR/tangible-interface game levelHead. Admittedly there's not much up on the project page yet, but here's a YouTube video that conveys the general idea pretty well. It still has glitches, but I'll iron those out soon enough.

At some point I also want to look into using invisible markers (I have a few promising possibilities there) or full-colour picture markers (also possible, though they require much more CPU brawn).

Here's a better quality video in the OGG/Theora format (plays in VLC).

Enjoy.

Thursday, August 2, 2007, 06:09 PM - dev

Here are packages of Packet Garden for Ubuntu 7.04.

To install, just download, double-click and go. You might want to install dpkt and pypcap first (also found at the above link).

Monday, June 18, 2007, 03:56 PM - dev

Aside from moving country, I've just finished developing a project at Interactivos at the excellent Media Lab Madrid. I tried to spend as much time as possible there but alas had chores like setting up a new apartment. Nonetheless I had a lot of fun.

Simone Jones was one of the instructors: someone with a great deal of experience with electronics, especially in the context of motorised cameras. Because the project I had originally proposed proved unfeasible in the time frame, and Simone wanted to work on something, we decided to team up.

We threw around several ideas, mostly to do with 'editing' the existing architecture of the exhibition space by adding an extra room seen only through a CCTV-like display: a kind of haunting. However, as the lighting conditions of the space were changing so frequently in the days leading up to the group show, we couldn't pull this off. For this reason we decided to work small. Really small.

The idea was simple: augment a solid cube with six little rooms such that the cube becomes a tangible interface for navigating through an architecture. A mind-game: "How are the rooms connected?"

I added some code to ARToolkit so that it could support occlusion (i.e. hiding virtual objects 'behind', or 'inside', real objects) and used a simple mask object to aid the process.
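Occlusion with a mask object is commonly done in two passes: first draw the mask (a 'phantom' model of the real object) into the depth buffer only, with colour writes disabled (in OpenGL, via `glColorMask`), then draw the virtual geometry with depth testing so that anything behind the mask is rejected and the camera image shows through. The toy renderer below models that on a single scanline; the span tuples and the `render` function are hypothetical, and this is a sketch of the general technique rather than the actual ARToolkit patch.

```python
INF = float("inf")

def render(width, background, mask_spans, virtual_spans):
    """background: camera pixels for one scanline.
    mask_spans: (start, end, depth) for the invisible real-object mask.
    virtual_spans: (start, end, depth, colour) for the augmented geometry."""
    colour = list(background)  # the camera image is the starting colour buffer
    depth = [INF] * width
    # Pass 1: the mask writes depth only, so the camera image stays visible.
    for start, end, z in mask_spans:
        for x in range(start, end):
            depth[x] = min(depth[x], z)
    # Pass 2: virtual geometry is depth-tested against whatever pass 1 wrote.
    for start, end, z, c in virtual_spans:
        for x in range(start, end):
            if z < depth[x]:
                depth[x] = z
                colour[x] = c
    return colour
```

A virtual room sitting behind the mask shows everywhere except where the real object covers it; move the room in front of the mask and it draws over the whole span.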

Here's a little clip in the OGG Theora format (plays in VLC) that perhaps better explains it all.

Simone and I are already talking about a large version of this for a later show. In the meantime I'm adapting it into a small game where you must help a character to escape the block by leading it from room to room: by turning the cube you select the next room the character will enter. Several cubes can be used so that when a character is finally led to the exit door of one cube it will jump to the entrance of another cube (or 'level') placed nearby. I plan to make this puzzle game around 5 cubes long. More about that later..
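The "how are the rooms connected?" puzzle can be modelled as a graph search. In the sketch below each of the cube's six faces holds one room, a room can only open onto the four faces that border it (never the opposite face), and a hypothetical set of doors decides which of those borders are passable; a breadth-first search then finds the shortest sequence of rooms from entrance to exit. The face names and door layout are illustrative assumptions, not levelHead's actual level data.

```python
from collections import deque

OPPOSITE = {"top": "bottom", "bottom": "top", "front": "back",
            "back": "front", "left": "right", "right": "left"}

def neighbours(face):
    # Every face of a cube borders the four faces other than itself and its opposite.
    return [f for f in OPPOSITE if f not in (face, OPPOSITE[face])]

def shortest_route(start, exit_face, doors):
    """doors: set of frozensets, each naming two adjacent faces joined by a door.
    Returns the list of rooms visited, or None if the exit is unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == exit_face:
            return path
        for nxt in neighbours(path[-1]):
            if nxt not in seen and frozenset((path[-1], nxt)) in doors:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None
```

Chaining cubes into levels would then just mean treating one cube's exit as the next cube's start.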

The exhibition uses a Sony EyeToy on an Ubuntu Linux system. Worth mentioning is that I used the super Rastageeks OV51x-JPEG drivers: a 640x480 webcam on Linux for less than EUR40? Look no further!

Addendum 19-06-07: For a long list of reasons I have never found character animation a very satisfying task, probably due to me being quite horrible at it. For this reason I'm very open to collaborating with a good character animator on this project. The data needs to come from Blender via the osgCal3D exporter (shipped with recent versions of Blender). Get in touch with me by interpreting this image.

Monday, March 19, 2007, 11:23 PM - dev

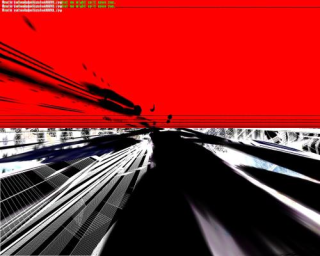

The piece I made while serving as Artist in Residence at Georgia Tech finally concluded as 'ioq3aPaint': an automatic painting mechanism using QuakeIII, where software agents in perpetual combat drag texture data as they fight, rendering attack vectors as graphic gesture. Here's a short clip (64M, 4 min 50 s, Ogg Theora) of one of the many iterations. It will play in VLC.

The exhibition was brief, but the opening night and talk brought many thoughtful questions. Game designer and theorist Michael Nitsche was responsible for a lot of great commentary, some of which he wrote about here.

ioq3aPaint builds heavily on q3aPaint, introducing a fresh palette and giving audiences the ability to cycle through palettes in real time.

Not far off is the ability to send screenshots straight to a printer. The idea is that on the opening night of a future exhibition, audiences could take screenshots while the abstractions evolve, which would in turn be sent off to a large-format canvas printer. The show itself would continue the following day as a normal painting exhibition.

If you're interested in playing around with QuakeIII as a painting tool, you can get fairly far working only in the console. Play with r_fov, r_drawWorld and r_showTris, especially once you've entered 'team s' and there are a few bots in the scene. From there, start manipulating GL functions in code/render/ and drive them by adding new keybinds to code/client/cl_input.c.

A big thanks to all those in the LCC department for making it happen, and an extra special thanks to Celia Pearce for setting up the initiative in the first place. Celia is one of the few people really pushing experimental game development practices in both institutional and industry contexts, and has been doing so for some years. Cheers to that.

Friday, February 2, 2007, 09:09 PM - dev

Two new projects are in the wings, the first of which I'll announce now.

This project takes a wooden chess-board and repurposes it as a musical pattern sequencer, where chess pieces in the course of a game define when and which notes will be played.

Each side has a different timbre so as to be easily distinguishable from the other. Pawns have different sounds than bishops, which in turn have different sounds than knights, and so on.

As the game progresses and pieces are removed, the score increasingly simplifies.

It'll be developed at Pickled Feet laboratories with the eminent micro-CPU expert Martin Howser.
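One way to picture a chessboard as a pattern sequencer: read the eight files (a to h) as eight time steps, the ranks as pitches, and the side plus piece type as the timbre. The mapping below (a C major scale from MIDI note 60, one timbre name per side/piece pair) is purely a hypothetical illustration of the idea, not the project's design; it does show why the score simplifies as the game goes on, since every captured piece is one fewer event in the loop.

```python
PIECES = ("pawn", "knight", "bishop", "rook", "queen", "king")

# Hypothetical: one timbre name per (side, piece type).
TIMBRES = {(side, piece): f"{side}-{piece}"
           for side in ("white", "black") for piece in PIECES}

# Hypothetical: ranks 1..8 mapped onto a C major scale starting at MIDI note 60.
RANK_TO_PITCH = [60, 62, 64, 65, 67, 69, 71, 72]

def build_pattern(board):
    """board maps squares like 'e4' to (side, piece).
    Returns 8 steps (files a..h), each a sorted list of (pitch, timbre) events."""
    steps = [[] for _ in range(8)]
    for square, (side, piece) in board.items():
        file_index = ord(square[0]) - ord("a")
        rank_index = int(square[1]) - 1
        steps[file_index].append((RANK_TO_PITCH[rank_index], TIMBRES[(side, piece)]))
    return [sorted(step) for step in steps]
```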

Thursday, February 1, 2007, 06:00 PM - dev

After several months hacking on this, I've finally released PG for all three platforms simultaneously. It's now considered 'stable'. Head over to http://packetgarden.com and take it for a ride.

A big thanks to: Jerub for detailed testing of the OS X PPC port, Marmoute for the OS X PPC package, Ababab for providing PPPoE test packets, extensive beta testing of the Windows port and his feature suggestions, Davman for beta testing the Windows port and for some fine feature requests, Krishean Draconis for porting/compiling Python GeoIP for Windows, pix for optimisation advice, Marta for both her practical suggestions and eye for aesthetic detail, Atomekk for his early testing of the Win32 port and for the Win32 build of Soya, Jiba for Soya itself, and all the other people who have sent bug reports and hung out in IRC to help me fix them. A big and final thanks to Arnolfini (esp. Paul Purgas) for the opportunity to learn a lot about packet sniffing, this thing called 'The Internet' and a fair bit more about 3D programming along the way. I've really enjoyed the process.

Now for something completely different..

Sunday, January 14, 2007, 03:22 AM - dev

As the topic doesn't suggest, http://packetgarden.com is now live. Beta testing is also well underway, with packages for Linux, Win32 and OS X going out the door and into the hot mitts of guinea pigs. If you're also up for a little beta testing, don't hesitate to get in touch!

I've had a lot of questions about this project, some about privacy, some about the development and engineering side of things. For this reason I've put up an 'about Packet Garden' page here.

Thanks to open standards, I wasn't entertaining madness in undertaking the task of writing for three different operating systems simultaneously. That said, a big thanks to marmoute for help with the reasonably grisly task of packaging the OS X beta.

< rant >

It's clear that developing a free-software project on a Linux system involves substantially less guesswork than on Windows and OS X.

Determining at which point the UNIX way stops and the Apple way begins in OS X Tiger is pretty tricky, with /System/Framework libraries often conflicting with libraries installed into /usr/local/lib, or simply with libraries linked against locally. Because there is no ldconfig I don't have the advantage of a 'linker', and so I couldn't work out how to force my compiler to ignore libs in /System/Frameworks and link against my locally installed libraries. If there is any rhyme or reason to this, or some FM I should RT, I'm keen to hear about it.

Acquiring development software on the Mac is also tricky: in Debian I have access to a pool of 16000+ packages, readily available, pre-packaged and tested for system compatibility. A proverbial fish out of water, I took the advice of a seasoned Apple software developer and tried Darwin Ports and Fink, but both had less than a third of the software I'm used to in Debian and were both pretty broken on the Mac I used anyway. So it was back to Google, hunting around websites to find and download development libraries. I managed to find all the software OK, but having found it online, I'm never sure which version is compatible with the system as a whole: neither Windows nor OS X has any compatibility policy, database or watchdog in place to anticipate or deal with conflicts potentially introduced by software not written by Microsoft or Apple respectively. This is still a major shortcoming of both OSes, I think. I can't see this happening with Microsoft in future, but perhaps Apple will get it together one day and create it in the form of a compatibility database, software channel or similar that allows developers to test and register their projects for compatibility against Apple's own libraries (and ideally those by others), sorted by license. Maybe this already exists and I don't know about it.

At this stage my development environment was nearly complete, but the libs I'd downloaded were causing odd errors in GCC. It turns out I needed to download a new version of the compiler, which is bundled into a 900MB package called Xcode that contains a ton of other stuff I don't need.

Getting a development environment up and running on Windows wasn't as difficult, though it suffers the same problems of finding and installing software, not to mention determining whether you're allowed to redistribute it. By contrast, if the software I'm looking at is in Debian main, I can be sure it's free software, which affords me the legal right of redistribution.

One great advantage of developing on Windows again, for the first time in around six years, is having to write code for an operating system with such poor memory management. Everything written to memory has to be addressed with such caution that it greatly improved my code in several places, for all platforms. Linux, however, has excellent memory management and gracefully dances around exceptions where possible. Perhaps developing on Windows every once in a while is, in the end, actually a healthy exercise.

That said working with anything relating to networking on Windows is absolute voodoo at the best of times. Thankfully OS X has the sanity of a UNIX base so at least I can find out what is actually going on with my network traffic and the devices it passes over.

Saturday, December 16, 2006, 04:43 PM - dev

In the course of coding Packet Garden I've drawn on several external libraries, two of which deal with the packet capture part. One is Pypcap, an excellent Python interface to tcpdump's distribution of libpcap; the other is dpkt.

As there were no Debian or Ubuntu packages, I've packaged them and added them to a new repository where I'll host third-party software I package for both these platforms in future.

Saturday, December 16, 2006, 03:12 PM - dev

As it eventuated, some measure of feature creep set in, but let's hope it's positive. The Arnolfini have given me more time, so I'm gladly taking it.

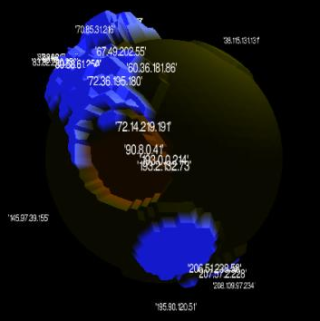

One addition is that Packet Garden now reports the geographical location of the remote machine you're accessing with 97% certainty, drawing information from an updated database on the host. This image shows it in action.

Detecting the geographical location of a remote host presents an interesting problem: IP block ranges are assigned to countries, but companies in those countries tend to do business across borders. So while 91.64.0.0 might be an aggregation assigned to Deutschland, it is 'owned' and dealt out by an ISP called Kabel Deutschland. If the ISP were to expand into the Netherlands, there is nothing stopping Kabel Deutschland giving out German IPs to Dutch customers. This is why taking a WHOIS lookup literally is the wrong approach.

I recently discovered that MaxMind provides a database offering a reasonable level of accuracy under the LGPL, along with a Python interface to their GeoIP API. Right now this only works under Linux, but it should work under OS X just fine. The Win32 version may have to wait until I can compile the lib for that platform.
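Underneath, a GeoIP-style lookup is a search over sorted address ranges: each range maps to wherever its addresses are observed to be used, which is exactly what a literal WHOIS reading can get wrong. The sketch below shows that range structure with a binary search; it is not MaxMind's API, and its table uses documentation-reserved blocks (RFC 5737) with made-up country codes rather than real data.

```python
import bisect
import ipaddress

def _ip(text):
    return int(ipaddress.ip_address(text))

# Illustrative table only: RFC 5737 documentation ranges with made-up countries.
RANGES = sorted([
    (_ip("192.0.2.0"), _ip("192.0.2.255"), "DE"),
    (_ip("198.51.100.0"), _ip("198.51.100.255"), "NL"),
    (_ip("203.0.113.0"), _ip("203.0.113.255"), "AU"),
])
STARTS = [start for start, _, _ in RANGES]

def country_for(ip):
    """Return the country code of the range containing ip, or None if unlisted."""
    value = _ip(ip)
    i = bisect.bisect_right(STARTS, value) - 1  # last range starting at or below ip
    if i >= 0 and RANGES[i][0] <= value <= RANGES[i][1]:
        return RANGES[i][2]
    return None
```

Keeping the ranges sorted makes each lookup a binary search, which matters when thousands of remote hosts are seen in a day.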

Friday, October 27, 2006, 10:35 PM - dev

A thousand lines of code since I last wrote, and a few hundred away from finishing Packet Garden. It's a matter of days now.

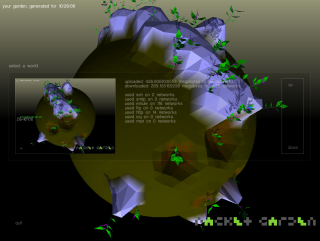

The UI code still needs some TLC - you can hear the bugs chirp at night - but there's now a basic configuration interface that saves out to a file and a history browser for loading in previously created worlds. Here's a little screenshot of the work in progress showing the world-browser overlay and the result of a busy night of giving on the eMule network.

Due to the vast number of machines a single domestic PC will reach for in a day of use, I've had to do away with graphing whole unique IPs and am now logging and grouping IPs within a network range. This means all IPs logged in the range 193.2.132.0 to 193.2.132.255 would be logged as a peak or trough at 193.2.132.255. This has dramatically reduced the total generation time of a world, including deforming the mesh and populating the garden world with flora. While I saw it as a compromise at first, it's actually closer to my original desire to graph 'network regions'; in fact I could go even higher up and log everything under 193.2.255.255.
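The grouping described above amounts to collapsing each address onto the last address of its network block and summing traffic per block. A minimal sketch with Python's standard `ipaddress` module follows; the `(remote_ip, byte_count)` record format and the function names are assumptions for illustration, not Packet Garden's internals.

```python
import ipaddress
from collections import Counter

def region_key(ip, prefix=24):
    """Collapse an address onto the last address of its /prefix block,
    e.g. 193.2.132.17 -> 193.2.132.255 for prefix=24."""
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.broadcast_address)

def group_traffic(records, prefix=24):
    """records: iterable of (remote_ip, byte_count); returns total bytes per region."""
    totals = Counter()
    for ip, nbytes in records:
        totals[region_key(ip, prefix)] += nbytes
    return totals
```

Widening the prefix from 24 to 16 gives the coarser grouping, where everything in 193.2.x.x lands on 193.2.255.255.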

Thursday, September 14, 2006, 12:35 AM - dev

.. well, sort of.

Packet Garden is coming along nicely, though there are still a few challenges ahead. These are primarily related to building a Windows installer, not my forte as I primarily develop on and for Linux systems. Packet capture on Windows is also a little different from that on Linux, though I think I have my head around this issue for the time being. So far, running PG on OS X looks to be without issue. I'd like to see the IPs resolved back into domain names, so that landscape features could be read as remote sources by name. While possible in itself, it can't be done fast enough, as each lookup needs to be done individually.
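Each reverse lookup has to be made individually, but they don't have to be made one after another. A minimal sketch using Python's standard library (`socket.gethostbyaddr` inside a `ThreadPoolExecutor`) overlaps the waiting; `resolve_all` and its `resolver` parameter are hypothetical, the latter added so the lookup can be swapped for a cache or a stub. Whether this is fast enough for a full day of addresses is untested here.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

def reverse_lookup(ip):
    """Return the host name for ip, or the ip itself if it doesn't resolve."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return ip

def resolve_all(ips, resolver=reverse_lookup, workers=16):
    """Resolve many addresses concurrently; returns {ip: name} in input order."""
    ips = list(ips)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(zip(ips, pool.map(resolver, ips)))
```

Unresolvable addresses fall back to the raw IP, so a landscape feature always has some label.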

One thing I've been surprised by in the course of developing this project is just how many unique IPs are visited by a single host in the course of what is considered 'normal' daily use. If certain P2P software is being run, where connections to many hundreds of machines in the course of a day are not unusual, very interesting landscapes start to emerge.

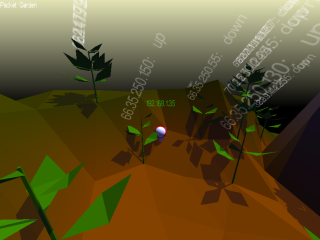

Basic plant life now populates the world, each plant representing a website visited by the user. Common protocols like SSH and FTP will generate their own items, visible on the terrain. Collision detection has also improved, aiding navigation, which is done with the cursor keys. I've added stencil shadows and a few other graphic features, and am on the way to developing a basic user interface.

Previously it took a long time to actually generate the world from accumulated network traffic, but this has since been resolved thanks to some fast indexing and writing the parsed packet data directly to geometry. The advantage of the new system is that the user can start up Packet Garden at any point throughout the day and see the accumulated results of their network use.

Here are a few snaps of PG as it stands today, demoing a world generated from heavy HTTP, Skype, SoulSeek and SSH traffic over the course of a few hours. More updates will be posted shortly.

Tuesday, August 15, 2006, 10:26 PM - dev

Mid this year the Arnolfini commissioned a new piece from me by the name of Packet Garden. It's not due for delivery yet (October), but here are a few humble screenshots of early graphical tests.

I'll put together a project page soon, but in the meantime, here's a quick introduction.

A small program runs on your computer capturing information about all the servers you visit and how much raw data you download from each. None of this information is made public or shared in any way; instead it's used to grow a unique little world, a kind of 'walk-in graph' of your network use.

With each day of network activity a new world is generated, each of which is stored as a tiny file for you to browse, compare and explore as time goes by. Think of them as pages from a network diary. I hope to grow simple plant-like forms from information like dropped packets too, but let's see if I get time.

I'm coding the whole project in Python, using the very excellent 3D engine, Soya3D.

Here's a gallery which I'll add to as the project develops.

Thursday, June 29, 2006, 02:53 AM - dev

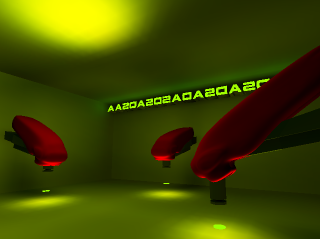

'Tapper' is a small virtual sound installation exploring iterative rhythmical structures using physical collision modeling and positional audio.

Three mechanical arms 'tap' a small disc, bouncing it against the ground surface. On collision each puck plays the same sample. Because the arms move at different times, polyrhythms are produced. The final output is mixed into 3D space, so where you place yourself in the virtual room alters the emphasis of the mix.

I'll make a movie available soon and add it to this post.
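The polyrhythm comes from simple arithmetic: arms tapping at different periods only line up again after the least common multiple of those periods, and the positional mix can be approximated by attenuating each tap with distance from the listener. The sketch below assumes integer tick periods and a plain inverse-distance law; both are hypothetical stand-ins, not Tapper's physics or audio engine.

```python
import math

def polyrhythm(periods):
    """Tap times (in ticks) for each arm over one full cycle,
    i.e. until all arms coincide again."""
    cycle = math.lcm(*periods)  # requires Python 3.9+
    return cycle, {p: list(range(0, cycle, p)) for p in periods}

def gain(listener, source, ref_distance=1.0):
    """Inverse-distance attenuation, clamped to 1.0 close to the source."""
    d = math.dist(listener, source)
    return min(1.0, ref_distance / d) if d > 0 else 1.0
```

With periods of 3 and 4 ticks, for example, the pattern repeats every 12 ticks: three against four.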

Sunday, May 14, 2006, 02:16 AM - dev

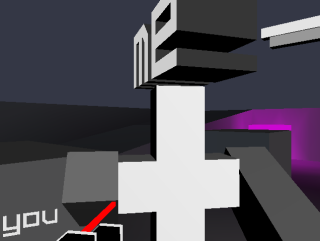

thanks to a generous commission from Cybersonica, pix and I had the resources we needed to throw a month at creating an entirely new version of fijuu: the engine, artwork and audio all got a complete overhaul.

development in such a short period of time was also testimony to the power of Skype as a collaboration tool. we spent around 8-10 hours in the IM each day sending each other patches, artwork, code snippets etc. right up until the point of walking out the door with Fijuu and Ubuntu on a shiny new Shuttle. if only Skype or an equivalent IM and VoIP tool had code formatting and a sketch block with drawing tools and SVG export..

the piece was installed at Phonica in London where it'll be on show until the 26th, alongside sister shows around London.

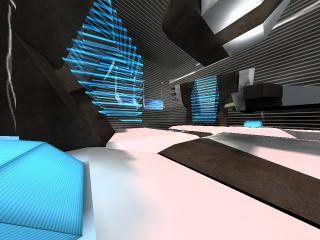

here are some screenshots of the finished work and, most importantly, here's the source code if you're at all interested in compiling it:

pix has put together a README listing dependencies. don't leave home without it!

darcs pull http://fijuu.com/darcs

Thursday, February 16, 2006, 05:07 AM - dev

Thanks to Ed Carter and the Lovebytes folk, q3apd is finally getting a good showing in an installation context; to date it's largely been appreciated in a performance setting. Unlike prior appearances, q3apd will this time be played entirely by bots which is so far proving to be an interesting challenge.

While these bots are in mortal combat they are generating a lot of data. q3apd uses this data to generate a composition, in essence providing a means to 'hear' the events of combat as a network of flows of influence.

To do this, every twitch, turn and change of state in these bots is passed to the program PD (Pure Data), where the sonification is performed: agent vectors become notes, orientations become accents and local positions become pitch. Events like jump pads, teleporters, bot damage and weapon switching all add compositional detail (gestures, you might say).
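A mapping of this kind boils down to rescaling game values into musical ranges. The sketch below is a hypothetical illustration only: it assumes a bot's height in the map spans -512 to 512 units and maps it to MIDI pitches 36 to 84, and maps view yaw in degrees to a velocity accent between 40 and 127. The real q3apd sonification happens in the PD patch, and its ranges may differ entirely.

```python
def scale(value, lo, hi, out_lo, out_hi):
    """Linearly map value from [lo, hi] to [out_lo, out_hi], clamping at the ends."""
    value = max(lo, min(hi, value))
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)

def bot_to_note(z, yaw, z_range=(-512.0, 512.0)):
    """Hypothetical mapping: height -> MIDI pitch 36..84,
    view yaw (degrees) -> velocity accent 40..127."""
    pitch = round(scale(z, *z_range, 36, 84))
    velocity = round(scale(yaw % 360, 0, 360, 40, 127))
    return pitch, velocity
```

A bot standing at floor level and facing yaw 0 would sound middle C at a soft accent; climbing higher raises the pitch until the clamp at the top of the assumed range.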

Once the eye has become accustomed to the relationships between audible signal and the events of combat, visual material can be sequentially removed with a keypress, bringing the sonic description to the foreground.

I've been working on a new environment for the piece and helping bots come to terms with the arena. Here's a preview shot. When the work goes live I'll post a video.

Thursday, February 2, 2006, 10:42 PM - dev

i've been working on a series of moving images designed for use on very large screens.

anagram 1

this composition takes about 10 minutes to complete a cycle and runs indefinitely. it's called "anagram 1" and is designed as a 'recombinational' triptych. it is not interactive in any way.

this is the binary [2.5M]. it runs on a Linux system with graphic acceleration. just run 'anagram1' after unpacking the archive.

here's a small gallery of screenshots.

anagram 2

"anagram 2" takes much longer than the previous work to complete a cycle and spreads the contents of three separate viewports from one to the next to create complex depths of field. using orthogonal cameras, cavities compress and unfold as the architecture pushes through itself. the format of anagram2 is also natively larger.

here's the binary [2.4M]. it runs on a Linux system with graphic acceleration. run 'anagram2' after unpacking the archive.

here's a gallery of anagram 2.

Thursday, February 2, 2006, 10:18 PM - dev

2002-3

q3apaint uses software bots in Quake3 arena to dynamically create digital paintings.

I use the term 'paintings' as the manner in which colour information is applied is very much like the layering of paint by brush strokes on a canvas. q3apaint exploits a redraw function in the Quake3 engine so that instead of a scene refreshing its content as the software camera moves, the information from past frames is allowed to persist.

As bots hunt each other they produce these paintings, turning a combat arena into a shower of gestural artwork.
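The persistence trick can be modelled as "don't clear the framebuffer": each new frame only overwrites the pixels it actually draws, and everything else keeps its colour from earlier frames, building up layers like brush strokes. The toy model below uses a flat list of pixels where `None` means "nothing rendered here this frame"; the `composite` function is hypothetical and is not Quake3 engine code.

```python
def composite(framebuffer, frame, clear=False):
    """Draw frame over framebuffer. With clear=False, pixels the new frame
    doesn't touch keep their old colour, so past frames persist."""
    base = [None] * len(framebuffer) if clear else list(framebuffer)
    return [new if new is not None else old for old, new in zip(base, frame)]
```

With `clear=True` you get normal rendering, where only the current frame is visible; leaving it off lets every bot's movement accumulate on the 'canvas'.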

The viewer may become the eyes of any given bot as it paints, and manipulate the brushes it uses. In this way, q3apaint offers a symbiotic partnership between player and bot in the creation of artwork.

The work is intended for installation in a gallery or public context with a printer. Using a single keypress, the user can capture a frame of the human-bot collaboration and take it home with them.

Visit the gallery.

Thursday, February 2, 2006, 10:07 PM - dev

2005

the first-person perspective has always been privileged with the pointillism (or synchronicity) of a physiology that travels with the will in some shape or form: "I act from where I perceive" and "I am on the inside looking out". in this little experiment, however, you are on the outside looking in.

in this take on the 2nd Person Perspective, you control yourself through the eyes of the bot, but you do not control the bot; your eyes have effectively been switched. naturally this makes action difficult when you aren't within the bot's field of view. so, both you and the bot (or other player) will need to work together, to combat each other.

there is no project page yet, but downloads and more information are available here.

Thursday, February 2, 2006, 10:06 PM - dev

1999-2000

this was my first successful foray into the use of games as performance environments.

this project uses a modified QuakeII computer gaming engine as an environment for mapping and activating audio playback, largely as raw trigger events. it works well both live and as an installation. I later implemented this project in Half-Life.

the project webpage, and movie are here.

Thursday, February 2, 2006, 10:05 PM - dev

2002/3. designed by Katherine Neil, Kate Wild and myself. built by the EFW team.

EFW is a Half-Life modification set in the real Australian Woomera detention center. playing as a detainee in subhuman conditions, the goal is to escape.

EFW has appeared on television and in newspapers and online journals worldwide. EFW was publicly condemned by the Australian Minister for Immigration (the man responsible for the racist detention regime in Australia), Phillip Ruddock.

Screenshot above (and entire map) developed by Steven Honnegger.

Read more on the project website.

Thursday, February 2, 2006, 10:04 PM - dev

2001->3 selectparks team.

acmipark is a virtual environment that contains a replication of the real-world architecture of the Australian Centre for the Moving Image, Melbourne, Australia. acmipark extends the real-world architecture of Federation Square into a fantastic abstraction. Subterranean virtual caves hang below the surface, and a natural landscape replaces the Central Business District in which ACMI actually resides.

It was inspired by the capacity of massively multiplayer online games such as Anarchy Online to create both a sense of place and a sense of community. It is the first multiplayer, site-specific, games-based intervention into public space.

up to 64 players can play simultaneously, and though there is a free Win32 client, the game is best played on site at the ACMI centre in Melbourne.

visit the project page here or check out this movie.

sadly there wasn't money for a Linux/OSX port, and parallel development for these platforms was out of the question as we were sponsored to use Criterion's Renderware.

Thursday, February 2, 2006, 10:03 PM - dev

pix and I, 2004.

fijuu is a 3D audio/visual performance engine. using a game engine, the player(s) of fijuu dynamically manipulate 3D instruments with PlayStation2-style gamepads to make improvised music.

fijuu is built on top of the open source game engine 'Nebula' and runs on Linux. fijuu (we hope) will one day be released as a live CD Linux project, so players can simply boot up their PC with a PS2-style gamepad plugged in and play without installing anything (regardless of operating system).

Among several appearances, fijuu was performed in Sonar2004 and received an Honourable Mention at Transmediale2005.

read more about the project, see screenshots/movies etc here.

Thursday, February 2, 2006, 10:02 PM - dev

pix and i 2003.

q3apd uses activity in QuakeIII as control data for the realtime audio synthesis environment Pure Data. we have developed a small set of modules that, once installed into the appropriate directory, pipe bot and player location, view angle, weapon state and local texture over a network to Pure Data, which is listening on a given port. once this very rich control data is available in PD, it can then be used to synthesise audio, or whatever. the images below are from a map, "gaerwn", that delire put together as a performance environment for q3apd. features in the map like its dimensions, bounce pads and the placement of textures all make it a dynamic environment for jamming with q3apd. of course any map can be used.

to use q3apd, first grab the latest Quake III point release; we used version 1.32. make a directory called 'pd' in /usr/local/games/quake3/, or wherever quake3 is installed on your system [this is the default install path on a Debian system]. then cd into this new directory and unzip this package of modules. ensure that this machine is on the same network as the box with PD on it [ping it to be sure], and run q3apd.pd in PD on that box. in the terminal, exec Quake III with:

yourbox:~$ quake3 +set sv_pure 0 +set fs_game pd +devmap (yourmap)

once the map is loaded pull down the console in q3a with the '~' key and type:

/set fudi_hostname localhost

/set fudi_port 6662

/set fudi_open 1

'netsend', an object used in PD and MaxMSP systems to send UDP data over a network, is now broadcasting. at this stage you should see plenty of activity in the pd patch on the PD machine. enjoy!
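For testing a patch without firing up Quake III, you can push messages at PD yourself. PD's network objects speak FUDI: whitespace-separated atoms terminated by a semicolon. The sketch below sends one such message over UDP, assuming the patch listens with a UDP netreceive on port 6662 (matching fudi_port above). The message contents, such as "bot 3 pos 120 -40 24", are hypothetical; q3apd's actual message layout isn't documented here.

```python
import socket

def fudi_message(*atoms):
    """Encode atoms as one FUDI message: space-separated, terminated by ';'."""
    return (" ".join(str(a) for a in atoms) + ";\n").encode("ascii")

def send_fudi(atoms, host="localhost", port=6662):
    """Send a single FUDI message over UDP to a listening PD patch."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(fudi_message(*atoms), (host, port))
```

Calling `send_fudi(["bot", 3, "pos", 120, -40, 24])` with the patch open should show the message arriving, which confirms the network side before you bring Quake III into the loop.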

system requirements

machine 1 --> Linux / Accelerated Graphics Card / Quake III Arena / ethernet adapter or modem
